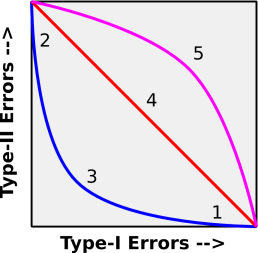

Figure 1: Receiver Operating Characteristics

As part of a discussion of primary versus secondary sources of information, the following question came up:

Suppose we want to look for terrorists trying to blow up airplanes. Is it "unscientific" to concentrate attention on a 20-year-old Arabic male traveling one way carrying a backpack? These are secondary characteristics.

The answer is yes, it is unscientific. And it is a really, really bad idea. All you will accomplish thereby is

Let’s discuss this in more detail. The options are:

Actually option (3) is a family of options depending on a "threshold" parameter. If you choose a super-permissive threshold it becomes equivalent to option (1), and if you choose a super-restrictive threshold it becomes equivalent to option (2). For intermediate values of the threshold we must examine the ROC (receiver operating characteristic). On a ROC graph, one axis represents false positives while the other axis represents false negatives. Draw the curve, parameterized by the threshold. Some examples of what the curve might look like are shown in figure 1.

In the figure, point 1 is a lousy operating point, because there are too many type-I errors. On the other edge of the same sword, point 2 is also a lousy operating point, because there are too many type-II errors. Point 3 is a happy medium, with relatively few errors of either type. We can move along the blue curve by changing the decision threshold. The blue curve represents a good algorithm, because it is possible to find a good operating point on this curve.
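To make the idea of a curve "parameterized by the threshold" concrete, here is a minimal sketch in Python. The scores, labels, and function names are all hypothetical, invented purely for illustration; the only assumption is that the screening procedure assigns each traveler a suspicion score and flags everyone at or above the threshold.

```python
def error_rates(scores, labels, threshold):
    """Return (false_positive_rate, false_negative_rate) when every
    traveler scoring at or above `threshold` is flagged.
    labels[i] is True for a bad guy, False for a good guy."""
    n_good = sum(1 for bad in labels if not bad)
    n_bad = sum(1 for bad in labels if bad)
    # Type-I error: a good guy gets flagged.
    fp = sum(1 for s, bad in zip(scores, labels) if s >= threshold and not bad)
    # Type-II error: a bad guy gets through.
    fn = sum(1 for s, bad in zip(scores, labels) if s < threshold and bad)
    return fp / n_good, fn / n_bad


def roc_points(scores, labels, thresholds):
    """One point on the ROC graph per threshold setting."""
    return [error_rates(scores, labels, t) for t in thresholds]


# Hypothetical toy data: a reasonably informative score.
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [False, False, False, True, False, True, True, True]

curve = roc_points(scores, labels, [0.0, 0.5, 1.0])
for fp_rate, fn_rate in curve:
    print(f"false positives: {fp_rate:.2f}   false negatives: {fn_rate:.2f}")
```

With this toy data, the two extreme thresholds land at the two ends of the curve (all false positives, or all false negatives), while the intermediate threshold gives a point with relatively few errors of either type.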

In figure 1, the red line on the diagonal represents something that in a sane world would be the worst possible algorithm. It is uninformative. It is no better than rolling the dice and making a decision without looking at the data.

In figure 1, the magenta curve, the upper curve, is in some sense worse than the worst possible curve. It makes more mistakes than completely uninformed random guessing. Ironically, if you had such an algorithm, you could put it to good use, if you were wise enough to do the exact opposite of whatever it told you to do.
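The "do the exact opposite" trick can be checked with a few lines of Python. This is only a sketch with made-up data: `flags` stands for the decisions of some hypothetical worse-than-random algorithm, and flipping every decision turns error rates of (p, q) into (1 - p, 1 - q).

```python
def rates(flags, labels):
    """Return (false_positive_rate, false_negative_rate).
    flags[i] is True if traveler i was flagged;
    labels[i] is True if traveler i is actually a bad guy."""
    n_good = labels.count(False)
    n_bad = labels.count(True)
    fp = sum(1 for f, bad in zip(flags, labels) if f and not bad)
    fn = sum(1 for f, bad in zip(flags, labels) if not f and bad)
    return fp / n_good, fn / n_bad


# Hypothetical data: four good guys followed by four bad guys.
labels = [False, False, False, False, True, True, True, True]
# A worse-than-random algorithm: it mostly flags the good guys.
flags = [True, True, True, False, False, False, False, True]

original = rates(flags, labels)
flipped = rates([not f for f in flags], labels)

print("as given:", original)
print("inverted:", flipped)
```

On the uninformative diagonal the two error rates sum to 1. Here the original algorithm's rates sum to more than 1, and the inverted algorithm's rates sum to less than 1 by the same margin.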

One of the biggest mistakes a strategist can make is to underestimate the enemy. Most "profile" strategies make this mistake. Unless the enemies are incredibly stupid, they will figure out how the profile works and find a way to "game the system". Specifically: a suicide bomber is not going to show up at the screening point wearing a keffiyeh and a long beard.

As a result, the profiling option can easily produce a ROC that is worse than the worst sane possibility, worse than uninformed random guessing. Everybody who shows up with a beard and keffiyeh will be a good guy (false positive), and every bad guy who shows up will not get detected (false negative). You lose coming and going. The hardship imposed on the population is enormous compared to the hardship imposed on the bad guys.
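Here is a toy calculation of that lose-lose outcome, sketched in Python. Every number is invented for illustration, and the key assumption is the one made above: the bad guys know the profile and simply avoid matching it.

```python
# Each traveler is a pair (matches_profile, is_bad_guy).
# Hypothetical population: 10 good guys, 3 of whom happen to match
# the profile, plus 2 bad guys who know the profile and avoid it.
travelers = (
    [(True, False)] * 3      # good guys who match the profile
    + [(False, False)] * 7   # good guys who do not match
    + [(False, True)] * 2    # bad guys, deliberately not matching
)

n_good = sum(1 for _, bad in travelers if not bad)
n_bad = sum(1 for _, bad in travelers if bad)

# The profiling rule flags exactly those who match the profile.
false_positives = sum(1 for match, bad in travelers if match and not bad)
false_negatives = sum(1 for match, bad in travelers if not match and bad)

fp_rate = false_positives / n_good
fn_rate = false_negatives / n_bad

print(f"false positive rate: {fp_rate:.2f}")
print(f"false negative rate: {fn_rate:.2f}")
print("worse than random guessing:", fp_rate + fn_rate > 1.0)
```

Uninformed random guessing gives error rates that sum to 1. In this sketch every bad guy gets through, so the false negative rate alone is already 1, and every good guy who gets hassled pushes the total further past the diagonal.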

In such a situation, you would be better off either:

To summarize: assuming non-extremal threshold settings and non-stupid enemies, profiling has

There are other options that work better than anything so far mentioned, but they don’t work very well. That’s a topic for another day.

Also: I predict that the next major atrocity won’t be a bomb on an airliner. I hesitate to make such predictions. On 6 March 1995 I asked a security committee why they were fixated on bombs to the exclusion of poisons, and airliners to the exclusion of office towers and subways. They rolled their eyes patronizingly. They assumed I was naïve or daft or both.

Two weeks later they were really, really angry. They wanted to burn me for a witch. (If you’re wondering why, see reference 1.)