Figure 1: Ionization Energy Trends

I was asked to comment on the Physics Standards Test (reference 1). It is part of the California Standardized Testing and Reporting Program.

Executive summary: The main issue has to do with understanding the process, and fixing the process. How did the system get to be so broken as to produce questions like this? How can we fix it?

As a tangentially-related minor point, we note that the released questions have many flaws. (Details can be found in section 2.) That’s a minor point, because you presumably already knew that. Anybody with rudimentary physics knowledge and teaching ability could see that in an instant. It is not the purpose of this document to quantify just how awful this test is, nor to see how/whether we can improve specific test-questions.

An informative overview of the existing process can be found in reference 2.

The test is called a “Standards Test” but the standardization is not very effective. At the beginning of reference 1 one finds a list of standards, but I see no evidence that the questions uphold those standards.

For that matter, the relevance and even the correctness of the stated standards is open to serious question. This is discussed in section 4.

I am informed that the taxpayers paid ETS (and possibly some other companies) to produce these questions. Well, they should demand a refund. Whoever prepared these questions should be disqualified from doing so in the future.

More generally, whoever prepares questions in the future should provide, for each question, a brief statement as to its rationale. That is, they ought to tell us what the question is supposed to test for. Also the preparer should be responsible for vetting the questions, and should provide evidence of validity and sensitivity. That is, does the question do what it is supposed to do? This includes making sure the intended answer really is better than the distractors, and that each of the distractors really serves some purpose.

It is also important to consider the standard as a whole. It is unacceptable for a question to address one narrow part of the standard, while contravening other more-important parts of the standard (notably the parts that implicitly and explicitly expect students to be able to think and reason effectively).

Similarly it is important to consider the test as a whole. There are important questions of balance and coverage, as discussed in section 3. In particular, even if the test contains some plug-and-chug questions that are individually acceptable, a heavy preponderance of such questions, to the near-exclusion of questions that require even a modicum of reasoning, is collectively unacceptable.

Process must not be used as an excuse. That is, to turn the proverb on its head, the means do not justify the ends. It is preposterous to argue that the questions must be OK because they resulted from an established standards-based process. The unalterable fact is that the questions are not OK. Somebody has got to take responsibility for this.

I mention this because some folks involved in the process have adopted the attitude that

“If an item assesses a standard, it’s good to go on the test”.

Talk like that makes my hair stand on end! It’s a complete cop-out, i.e. it is a lame excuse for acting without good judgment, without common sense. It ignores the truism that no matter what you’re doing, you can do it badly, and sure enough, we can see in section 2 many items that badly address various aspects of the standard.

The facts of the matter are:

- a) The standards do not produce the test. People produce the test. These people need to take responsibility for the product.

- b) If it’s a good test, the standards do not get the credit.

- c) If it’s a bad test, the standards do not get the blame.

- d) It is possible to create a good set of questions, with far fewer deficiencies than we see in section 2, within the existing standards.

- e) There are opportunities to improve the standards in certain ways, as discussed in section 4, but doing so will not change facts (a), (b), (c), and (d).

As an example of something that has much more potential for improving the process, here’s a suggestion: Dual-source the questions. That is, have two companies submit questions.

A crucial ingredient in this arrangement is to pay each company in proportion to how many of its questions are selected for use.

If a vendor objects to such a dual-sourcing arrangement, it is a dead giveaway that they don’t have confidence in their own product. You don’t want to do business with such a vendor.
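The proportional-payment idea can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `split_payment`, the vendor names, the budget, and the selection counts are all hypothetical, and a real contract would need more terms than this.

```python
from fractions import Fraction


def split_payment(budget, selected_counts):
    """Split a fixed budget among vendors in proportion to questions selected.

    budget: total amount to distribute (hypothetical figure).
    selected_counts: dict mapping vendor name -> number of its questions
        that the reviewers chose for the test.
    """
    total = sum(selected_counts.values())
    if total == 0:
        # Nothing was selected from anyone, so nobody is paid.
        return {vendor: Fraction(0) for vendor in selected_counts}
    return {
        vendor: Fraction(budget) * count / total
        for vendor, count in selected_counts.items()
    }


# Hypothetical example: 60 questions are needed; reviewers pick
# 45 from Vendor A and 15 from Vendor B.
payments = split_payment(100_000, {"Vendor A": 45, "Vendor B": 15})
for vendor, amount in payments.items():
    print(vendor, float(amount))
```

The point of the arrangement is the incentive: a vendor that submits weak questions sees its share shrink automatically, without anyone having to argue about it.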

Test printing, scoring, and analysis should be covered by a separate contract, separate from composing the questions.

There is a bank of questions that are eligible to be used on California Standards Tests. Each year, after the tests have been administered, 25% of the questions are released from the bank. The released questions are not used again. For an index of all released questions, see reference 3.

It is unclear whether the released physics questions (reference 1) are representative of the bank. The released questions are so bad that one hopes they are not representative. It is astonishing to see such a high concentration of objectionable questions. Perhaps they are the culls, removed in order to improve the bank. (Releasing the culls would create the paradoxical situation where doing the right thing makes matters seem worse than they are.)

The question numbers here conform to the 2006/2007 version of reference 1.

As a result, this question could well have negative discriminatory power. See discussion of this point under question 17.
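To make the notion of negative discriminatory power concrete: one common way to quantify it is the correlation between getting a particular item right and the score on the rest of the test. The sketch below uses made-up data, and the function names are illustrative assumptions, not a description of how the test vendor actually analyzes items.

```python
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation; returns 0.0 if either input is constant."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def item_discrimination(item_correct, rest_scores):
    """Correlate 0/1 correctness on one item with the score on all other items."""
    return pearson(item_correct, rest_scores)


# Made-up data for six students, ordered from weakest to strongest.
rest_scores = [10, 20, 30, 40, 50, 60]
healthy_item = [0, 0, 0, 1, 1, 1]   # stronger students get it right
backward_item = [1, 1, 1, 0, 0, 0]  # stronger students get it wrong

print(item_discrimination(healthy_item, rest_scores))   # positive
print(item_discrimination(backward_item, rest_scores))  # negative
```

An item with a negative value is worse than useless: it lowers the scores of exactly the students who understand the material best.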

Furthermore, some skilled teachers have taught their students to adopt the policy that “human error” is too vague to be acceptable as an explanation for anything. Therefore some test-takers will reject answer A out-of-hand.

It is pointless to argue whether these are strong objections or weak objections. Instead, the convenient and 100% proper way to proceed is to re-word the question to eliminate the objections. In this case a good starting place would be to speak of an object “dropped from rest” (as opposed to merely “falling”).

This just goes to support the larger point that it is important to have a timely, systematic vetting process. Also it is important to have a process for improving questions, not just selecting questions, because choosing the best of a bad lot is unsatisfactory.

Furthermore, the stem of the question contains strange misconceptions and anomalies. One normally applies voltage (not current) to light-bulb circuits, for a number of very good reasons. (One might apply current to a magnet coil, or to the base of a transistor, but those are significantly different physical situations.) Indeed applying current to a series circuit containing a chunk of rubber might be downright dangerous. On an advanced test, I might not object to a question-stem containing obiter dicta that cause confusion, but the confusion here is so pointless and so out of balance with the chug-and-plug questions that make up the rest of the test that I have to assume it is simply another mistake, another question drawn up by somebody who didn’t really understand the subject matter.

I am reminded of the proverbial expression “all hat, no cattle” (referring of course to someone who dresses like a cowboy but has not the slightest understanding of real cows or real cowboys). This question, like the previous one, appears to have been designed by someone who likes to talk about “the scientific method” but has no idea how real science is done ... likes to talk about “physics” but has no real understanding of the subject matter ... and likes to talk about “teaching” but has no idea how real teaching is done.

In either case, this is an objectionable question.

I am informed that the stem of the question should have asked about “speed” not “velocity”. Even with that explanation, the situation is well-nigh incomprehensible.

Another question on the same topic appears on the ETS web site (reference 5), as an example of an SAT question. The SAT version is markedly better. Why did California get saddled with the dumbed-down version?

Also, this uses “order” as a stand-in for “entropy”, which is not really correct, for reasons discussed in reference 7.

I also wonder about distractor A. Although there is a somewhat-common misconception concerning the northness of north poles and the southness of south poles, I suspect anyone who is sophisticated enough to know the vocabulary word “monopoles” doesn’t suffer from this misconception. It seems as if the test-makers were trying to show off, trying to show me that they knew about monopoles, but alas doing so in a way that weakened the test. All hat, no cattle.

In conjunction with the test-question booklet, students are given a “Physics Reference Sheet” containing “Formulas, Units, and Constants”.

Among these is the formula

$$\Delta S = \frac{Q}{T} \qquad \text{[allegedly]} \tag{1}$$

which is really quite objectionable, for reasons discussed in reference 7.

The released questions do not make use of this formula, but one may reasonably fear that some of the unreleased questions do.

When viewed as a whole, a well-designed test must meet a number of global requirements, such as requirements as to coverage and balance. That includes making sure that none of the important topics are skipped or unduly under-weighted.

Checking individual questions one by one is not good enough. Checking to see that an individual question is “acceptable” is nowhere near sufficient for achieving acceptable coverage and balance. The existing test-construction process pays far too little attention to coverage and balance. The individual questions, as bad as they are, are not the biggest problem. The lack of coverage and balance is a more serious and more deep-seated problem.
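A whole-test check of this kind is easy to mechanize. The following is a minimal sketch, assuming each question has been tagged with the topic it addresses. The topic names, the tags, and the 10% threshold are hypothetical and are not taken from the actual standards or test.

```python
from collections import Counter


def coverage_report(question_tags, all_topics, min_share):
    """For each topic, return (count, share of test, under_weighted flag)."""
    counts = Counter(question_tags)
    total = len(question_tags)
    report = {}
    for topic in all_topics:
        share = counts[topic] / total if total else 0.0
        report[topic] = (counts[topic], share, share < min_share)
    return report


# Hypothetical topic list; "reasoning" stands for multi-step thinking questions.
topics = ["motion", "energy", "heat", "waves", "electricity", "reasoning"]

# Hypothetical tags for a 12-question test, one tag per question.
tags = (["motion"] * 4 + ["energy"] * 3 + ["heat"] * 1
        + ["waves"] * 2 + ["electricity"] * 2)

report = coverage_report(tags, topics, min_share=0.10)
under_weighted = [t for t in topics if report[t][2]]
print(under_weighted)
```

Every question in this made-up test could pass an item-by-item review, and the report would still flag the test as a whole, because a topic that never appears cannot be caught by inspecting the questions that do.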

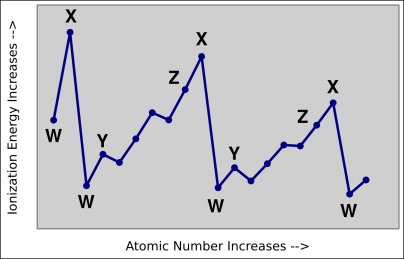

The idea of balance applies not just to individual bits of domain-specific knowledge, but also to higher-level goals. As an example of a higher-level goal, consider question 6 on the corresponding chemistry document (reference 8). In reference to figure 1 it says: «The chart ... shows the relationship between the first ionization energy and the increase in atomic number. The letter on the chart for the alkali family of elements is: ....»

This question requires knowing the definition of “alkali family” but does not ask for a rote recitation of the definition. Similarly it requires knowing the definition of “first ionization energy” but does not ask for a rote recitation. Thirdly it requires some minimal skill in interpreting a graph. Therefore this question has a special role to play as part of the overall test:

| If you are interested in testing the student’s ability to think, to combine ideas, as opposed to plugging and chugging, then this is a commendable question. The question is not difficult if you know the material, but it does require knowing the material and it also requires a multi-step thought process. | In contrast, if you aren’t trying to test for thinking skills, this question is sub-optimal, because its score is difficult to interpret: If the student gives a wrong answer, you won’t know which of the various steps went wrong. |

I am not saying that all questions should require combining multiple ideas. I am saying there needs to be a balance between checking basic factoids one-by-one and checking for higher-level thinking skills.

The alkali question has some remarkable weaknesses to go along with the strengths mentioned above. For one thing, the chart has four Ws but only three Xs, two Zs, and two Ys ... even though it would have been the easiest thing in the world to add a third Y and/or remove one of the Ws. This telegraphs that W is the “interesting” thing. A student who doesn’t know the material but is test-wise will pick up on this. I cannot imagine how this weakness arose, or how it slipped through the question-selection process.

Actually there are multiple excellent reasons for removing one of the Ws. Calling hydrogen an alkali metal represents the triumph of dogma over objective reality. For details on the placement of hydrogen, see reference 9.

If the released questions are representative, they indicate that the physics test has serious coverage and balance problems:

The full, official physics standards can be found in reference 10. You may wish to compare them to the standards for related subjects, such as math (reference 11), algebra (reference 12), science (reference 13), and chemistry (reference 14). There is even a standard for “Investigation & Experimentation” (reference 15).

The state also provides some “framework” documents in areas such as science (reference 16) and math (reference 17) to explain and elaborate upon the standards.

The standards need to be explicit about the following:

The primary, fundamental, and overarching goal is that students should be able to think, to reason effectively. This is far more important than any single bit of domain-specific knowledge.

Clearly it couldn’t hurt to say that, but you may be wondering whether it is really necessary.

Therefore, yes, it is important to stop pussyfooting around and explicitly make “thinking” the primary, fundamental, and overarching goal.

Moving now to a lower level, the tactical level, the standards fail to mention the great scaling laws. In 1638, Galileo wrote a book On Two New Sciences. In it, he made heavy use of scaling laws. The scaling laws are simultaneously more profound, more age-appropriate, and more readily applicable than many of the topics that are mentioned in the standards.
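As one illustration of the kind of scaling argument Galileo made, consider an object enlarged by a factor $L$ in every linear dimension, keeping the same shape and material. The symbols $L$, $A$ (load-bearing cross-sectional area), and $W$ (weight) are introduced here for the sketch and do not come from the standards.

```latex
A \propto L^{2}, \qquad W \propto L^{3}, \qquad \frac{W}{A} \propto L
```

The load per unit of cross-section grows in proportion to size, so a large animal cannot simply be a scaled-up small one; its bones must be proportionately thicker. A student needs nothing beyond areas, volumes, and ratios to follow this.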

Except for those omissions, and except for a few howlers mentioned below, the standards themselves seem reasonable. Although they could be improved, they are not the rate-limiting step. As mentioned in section 1, it is perfectly possible to make a good set of questions, much better than the questions discussed in section 2, within the current standards.

On the other side of the same coin, we should keep in mind the fact that you can’t make anything foolproof, because fools are so ingenious. No matter how good the standards are, they will never be foolproof or abuse-proof.

The corresponding “current” document is at: http://www.cde.ca.gov/ta/tg/sr/documents/rtqphysics.pdf

This analysis has been revised so that the question-numbering conforms to the 2006/2007 rtqphysics document, i.e. the one released in January 2007 and covering questions administered to students in 2006 and earlier years.