A lot of people who ought to know better think there should be 14 “f block” elements, but there is no data to support this. The set of integers from 0 through 14 inclusive has 15 members. People who cannot reliably count to 15 should not be telling other people how to do quantum mechanics. For details on this, see reference 2.

I realize this is not a major focus of the book, but it is one of those things where there is an upside to doing it right, and absolutely no downside.

However, this book jumps directly into the deep end of the pool, making assertions about «objects». This evidently includes objects of every kind, large as well as small, non-rigid as well as rigid. This leads to lots of problems, because many of the assertions are simply not true when applied to extended objects (even though they would be true if applied to pointlike particles). For example:

- On page 6, it says that in the absence of interactions an «object» will exhibit «uniform motion». Alas that’s just not true for extended objects. In reality, a lopsided object does not generally exhibit uniform motion. For a hands-on example, see reference 3.

- On page 48, it says «No matter what system we choose, the Momentum Principle will correctly predict the behavior of the system.» I’m sorry, there’s a lot more to physics than F=dp/dt ... especially for extended objects.

The other subsections in this section are OK, because they use frame-independent concepts such as uniform motion and changes in velocity.

In fact, though, Galileo clearly stated this law, many decades before Newton came on the scene. It was Galileo who made the major break with ancient tradition.

Imagine any particle projected along a horizontal plane without friction; then we know, from what has been more fully explained in the preceding pages, that this particle will move along this same plane with a motion which is uniform and perpetual, provided the plane has no limits. – Galileo Galilei (1638)

tr. Crew & de Salvio

It should also be noted that this law can be understood as a corollary of Galileo’s principle of relativity; see item 9.

We can give Newton credit for setting this law at the top of a numbered list, but that’s not the same as originating the law. See section 2.5 for more about this.

As to the physics, it is wrong coming and going:

- You could equally well say that interactions are responsible for the stability of ordinary terrestrial matter, i.e. the lack of change.

- On the other edge of the same sword, consider the superposition of two wave components, each of which is a standing wave, i.e. a stationary state. The superposition will be non-stationary. It will exhibit endless change, even though there is absolutely no «interaction» between the two components.
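
The second point can be made concrete with a short worked equation. This is a sketch that assumes the two components are quantum-mechanical stationary states with spatial parts φ₁, φ₂, energies E₁, E₂, and constant coefficients c₁, c₂; those symbols are introduced here for illustration and do not come from the book.

```latex
\psi(x,t) = c_1\,\phi_1(x)\,e^{-iE_1 t/\hbar} + c_2\,\phi_2(x)\,e^{-iE_2 t/\hbar}

|\psi(x,t)|^2 = |c_1\phi_1|^2 + |c_2\phi_2|^2
  + 2\,\mathrm{Re}\!\left[c_1^{*}c_2\,\phi_1^{*}\phi_2\,e^{-i(E_2-E_1)t/\hbar}\right]
```

Each component alone gives a time-independent density. The cross term oscillates at angular frequency (E₂−E₁)/ħ whenever the energies differ, with no interaction term anywhere in the expression.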

Perhaps more importantly, this depends on some profoundly incorrect assumptions about the role of «cause» and “effect” in the fundamental laws of physics, as discussed in reference 4.

As to the pedagogy, this is the sort of thing that leads to the worst sort of rote learning, because students think that if they learn those three words verbatim, they will be OK. In contrast, if they thought about those words at all, they would realize that they cannot possibly be true.

Every math and/or physics book I’ve ever seen uses square brackets for this purpose. M&I is my first exposure to angle brackets.

In particular, from the point of view of linear algebra, the sort of vectors we’re talking about are N×1 matrices. The things we call components are the matrix elements. For a matrix of any shape, everybody writes the matrix elements within square brackets. This includes N×M rectangular matrices, including special cases such as N×N square matrices and N×1 column vectors and 1×M row vectors.

If you want to say such things are beyond the scope of the course, that’s OK ... but it is not helpful to say they are «not legal (and not meaningful)».

Shut yourself up with some friend in the main cabin below decks on some large ship, and have with you there some flies, butterflies, and other small flying animals. Have a large bowl of water with some fish in it; hang up a bottle that empties drop by drop into a wide vessel beneath it. With the ship standing still, observe carefully how the little animals fly with equal speed to all sides of the cabin. The fish swim indifferently in all directions; the drops fall into the vessel beneath; and, in throwing something to your friend, you need throw it no more strongly in one direction than another, the distances being equal; jumping with your feet together, you pass equal spaces in every direction. When you have observed all these things carefully (though doubtless when the ship is standing still everything must happen in this way), have the ship proceed with any speed you like, so long as the motion is uniform and not fluctuating this way and that. You will discover not the least change in all the effects named, nor could you tell from any of them whether the ship was moving or standing still. In jumping, you will pass on the floor the same spaces as before, nor will you make larger jumps toward the stern than toward the prow even though the ship is moving quite rapidly, despite the fact that during the time that you are in the air the floor under you will be going in a direction opposite to your jump. In throwing something to your companion, you will need no more force to get it to him whether he is in the direction of the bow or the stern, with yourself situated opposite. The droplets will fall as before into the vessel beneath without dropping toward the stern, although while the drops are in the air the ship runs many spans. The fish in their water will swim toward the front of their bowl with no more effort than toward the back, and will go with equal ease to bait placed anywhere around the edges of the bowl. 
Finally the butterflies and flies will continue their flights indifferently toward every side, nor will it ever happen that they are concentrated toward the stern, as if tired out from keeping up with the course of the ship, from which they will have been separated during long intervals by keeping themselves in the air. And if smoke is made by burning some incense, it will be seen going up in the form of a little cloud, remaining still and moving no more toward one side than the other. The cause of all these correspondences of effects is the fact that the ship’s motion is common to all the things contained in it, and to the air also. That is why I said you should be below decks; for if this took place above in the open air, which would not follow the course of the ship, more or less noticeable differences would be seen in some of the effects noted. – Galileo Galilei (1632)

tr. Drake

See also section 2.5.

For starters, it talks about «Einstein’s Special Theory of Relativity (published in 1905)». That is grotesquely misleading. Our understanding of relativity did not begin or end with Einstein, and it did not begin or end in 1905. There were major contributions from Galileo, FitzGerald, Lorentz, Poincaré, Michelson/Morley, Minkowski, and many, many others. By far the most original and most consequential contributions were from Galileo (1632) and Minkowski (1908). See also section 2.5.

Let’s be clear: Our modern understanding of special relativity is based on contributions from many people, at least two of whom contributed far more than Einstein. Initially, Einstein downplayed Minkowski’s contribution, but before long he completely discarded the 1905 approach and relied on Minkowski’s spacetime approach for all later work, including General Relativity.

There is no excuse for sticking with the pre-1908 approach. It is more than 100 years out of date. It is more complicated, harder to explain, and harder to use. It must be completely unlearned before the modern approach can be learned. See reference 5.

|

That’s not exactly wrong, but it is unreasonable, because it is far messier and uglier than it needs to be. By “not exactly wrong” I mean that the correct physics is a solution to the equation; however, there are also innumerable unphysical situations that also satisfy the equation. We should contrast it with the modern (post-1908) way of expressing the same idea, namely

| $p = m\,u$ | (2) |

where p and u are four-vectors.1 This equation could hardly be simpler. Compared to equation 1, it is easier to understand, easier to explain, and easier to use.

For starters, the absolute-value bars in equation 1 are silly. They’re not exactly wrong, but they make the equation more complicated, less trustworthy, and less useful.

Note that the «p→» that appears in equation 1 is not quite the same as the p that appears in equation 2. In fact «p→» is the projection of the four-dimensional momentum p onto the spatial directions xyz in some chosen frame. It would be better to write the LHS of equation 1 in terms of pxyz or something like that ... and even better to forget about equation 1 and write equation 2 instead.

The p that appears on the LHS of equation 2 is the full four-dimensional momentum. Similarly, the u on the RHS is the full four-dimensional velocity. As discussed in reference 5, it is defined as:

| $u = \dfrac{dx}{d\tau}$ | (3) |

where x is the full four-dimensional position vector, and τ is the proper time. This equation could hardly be simpler. It is simple in its structure and simple in its conceptual interpretation.

The inverse square root that appears in equation 1 is best understood as a factor of dt/dτ, which results from choosing an unfortunate way of measuring time. The proper time τ makes things simple. The coordinate time t is the projection of τ onto some chosen basis, and it makes things seem more complicated than they really are, for instance when it is used to calculate the reduced velocity v that appears in equation 1. For details, see reference 5.
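
The role of dt/dτ can be spelled out in one line. This sketch takes equation 2 to be p = m u and equation 3 to be u = dx/dτ, as the surrounding description indicates; the boldface spatial vectors and the subscript xyz are notation assumed here for the projection onto the spatial directions of the chosen frame.

```latex
\begin{aligned}
\mathbf{p}_{xyz} &= m\,\frac{d\mathbf{x}_{xyz}}{d\tau}
                  = m\,\frac{dt}{d\tau}\,\frac{d\mathbf{x}_{xyz}}{dt}
                  = m\,\frac{dt}{d\tau}\,\mathbf{v}, \\
\frac{dt}{d\tau} &= \frac{1}{\sqrt{1 - v^{2}/c^{2}}}
\end{aligned}
```

Read this way, the square root is not a property of the momentum itself. It appears only because the spatial displacement is divided by the coordinate time t rather than by the proper time τ.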

This appears to be the only mention of Bernoulli in the whole book.

Suggestion: Just describe the physics, without the misleading name. A ball is suspended in a jet of air, i.e. in a narrow fast-moving stream of air.

The check-your-work rule is the cornerstone of critical thinking.

In later chapters, the check-your-work rule should go without saying, but here in chapter 1 it would be nice to mention it explicitly.

As discussed in section 2.4, I understand that at this point in the course, there are reasonable reasons for avoiding calculus concepts and calculus notation.

I would like to make a different point, focusing on the words that talk about the equation. The book highlights «the momentum principle» and bills it as a «fundamental principle» that «applies to every possible system» ... even though the formula as stated is not correct in general. It’s hardly even meaningful unless we assume that the force is constant, which is not the general case.

Suggestion: It would be better to say something to the effect that a more precise statement of the momentum principle will be given later, implicitly on page 125 and explicitly in equation 155.22. This is a manifestation of the “limitations” issue, as discussed in section 2.1.

- By far the most important “momentum principle” is conservation of momentum. Since the book uses the term “energy principle” to refer to conservation of energy, it seems astonishingly illogical and inconsistent to use «momentum principle» to refer to anything other than conservation of momentum ... especially given that energy and momentum are part of the same four-vector.

Pretending there is only one momentum-related principle cannot be justified on any scientific or pedagogical grounds. Students who learn this weird terminology will be at a handicap when trying to communicate with the rest of the population.

- I remark in passing that momentum appears on one side of the equation and force on the other, so it would make at least as much sense to call it “the force principle”.

- On top of all that: The equation F=dp/dt already has a name: It is known as the second law of motion ... and has been since Day One.

LEX II. Mutationem motus proportionalem esse vi motrici impressæ, & fieri secundum lineam rectam qua vis illa imprimitur. – Isaac Newton (1687)

Similar remarks apply to «the angular momentum principle» on page 426 and elsewhere. Contrast this with item 36.

Suggestion: The LHS of the law of universal gravitation really needs to be written as δgm. It is two jumps removed from the gravitational acceleration g. In the usual terrestrial lab frame, δgm is the largest single contribution to g, but it is not the whole story. See the discussion of “various different notions of gravity” in reference 7.

Furthermore, it must be emphasized that when the book speaks of «our» usual reference frame, the authors are speaking only for themselves, not for the larger community. Throughout the physics and engineering literature, if there is a vertical axis, the near-universal choice is to call it the z axis.

- One way of looking at this is to call it an abuse of the notion of “average”. The update rule for the position uses the “average” velocity, averaged over the whole step-1 interval, while the update rule for the momentum uses the “average” force in the neighborhood of the step-0 / step-1 boundary, which is not a reasonable estimate of the “average” over the actual interval being considered, i.e. the interval shown in the diagram.

- Another way of looking at it is as an example of equation hunting. “Some” kind of force is plugged into the equation «pf = pi + F[net]Δt», but it is not the appropriate force, not compatible with the originally-defined meaning of the equation.

- Yet another way of looking at it is to call it an abuse of the symbols, including Δ, i (for initial) and f (for final). These symbols are being used in inconsistent ways. For example, ri and rf refer to the “initial” and “final” positions, at the beginning and end of the step-1 interval. In contrast, pi and pf do not have any similar interpretation. There are various ways to fix this, for instance by recognizing that for practical purposes the momentum update is happening at a single point – at the boundary between steps – and defining pb and pa, where the subscripts b and a stand for “before” and “after”, namely before and after the step-0 / step-1 boundary. Another way to fix it would be to define a staggered step, consisting of the last half of step 0 and the first half of step 1.

This illustrates the distinction between calculus and finite-difference methods. As discussed in section 2.4, the inconsistencies would go away if we were using calculus, i.e. passing to the limit as Δ goes to zero. However, computers operate on finite differences, and the inconsistencies affect how the code is written, and can dramatically affect the results.

If you want the students to learn by rote to write the code a certain way, that would be semi-OK ... but please let’s be upfront about it. Let’s not pretend that the code is explained by the physics, when the real explanation has not been given. Conversely, if you want the students to understand what’s going on, you have to give the actual explanation.

Let’s be clear: Suppose a student understood the diagrams and understood the words in the text – words like “step” and “average” – and wrote some code accordingly. This student would get a very much worse result than a student who wrote the code in rote obedience to the recipe outlined in the text. Neither student would understand why, and in all likelihood the teacher wouldn’t either.

The explanation is that one approach results in a symplectic integrator, while the other does not; see reference 8. There is no way to explain this in terms of the “average” force or “average” velocity, averaged over some “step”.
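
The difference is easy to see numerically. The following is a minimal sketch, assuming a unit mass on a unit-stiffness spring; the test system, the step size, and the function names are illustrative choices, not taken from the book or from reference 8. Both loops apply the same two update rules. They differ only in whether the position update uses the momentum from the start of the step or the freshly updated momentum.

```python
def energy(x, p, m=1.0, k=1.0):
    """Total energy of a mass on a spring."""
    return p * p / (2.0 * m) + 0.5 * k * x * x


def forward_euler(x, p, dt, steps, m=1.0, k=1.0):
    """Non-symplectic: both updates use values from the start of the step."""
    for _ in range(steps):
        force = -k * x
        x, p = x + (p / m) * dt, p + force * dt
    return x, p


def euler_cromer(x, p, dt, steps, m=1.0, k=1.0):
    """Symplectic: update the momentum first, then move using the new momentum."""
    for _ in range(steps):
        p = p + (-k * x) * dt
        x = x + (p / m) * dt
    return x, p


e0 = energy(1.0, 0.0)
e_forward = energy(*forward_euler(1.0, 0.0, dt=0.01, steps=10_000))
e_cromer = energy(*euler_cromer(1.0, 0.0, dt=0.01, steps=10_000))

print(f"initial energy : {e0:.4f}")
print(f"forward Euler  : {e_forward:.4f}")
print(f"Euler-Cromer   : {e_cromer:.4f}")
```

With these settings the forward-Euler energy grows steadily, step after step, while the Euler-Cromer energy stays close to its initial value. Nothing about "average" force or "average" velocity distinguishes the two loops; the ordering of the updates does.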

This is on top of the even more obvious fundamental error of disregarding the centrifugal field; see also item 32.

For the correct physics of hydrostatic shapes and surface gravity, see reference 10.

The book all-too-consistently uses reciprocity as a code-word to refer to the third law of motion. This is not helpful. All the world refers to the third law as the third law. Furthermore, in physics, reciprocity already has a well-defined technical meaning.

It is important to get this right, because the third law is tantamount to conservation of momentum. There could hardly be anything more important.

At the very least, the numbers should be specified as applying to dry air.

Dry air contains roughly 78.09% (by volume) nitrogen, 20.95% oxygen, and 0.93% argon. In addition, there can easily be several percent of water vapor mixed in.

Alas the third paragraph in this section is profoundly wrong. For starters, we can tell that it is wrong because it contradicts the previous two paragraphs, insofar as it alleges an asymmetrical relationship between F and dp/dt. Furthermore, it is proof by bold assertion, with absolutely no evidence to back it up. The asymmetrical relationship is symbolized by an arrow, and also analogized to the highly asymmetrical relationship between cause and effect. Note that according to the usual definitions, a cause must precede its effect, so this breaks the equal-time property of the second law (in addition to breaking the symmetry).

For more about cause-and-effect and its relationship to the basic laws of physics, see reference 4. Compare item 16.

| Traditionally, physics has not defined force in terms of momentum (or vice versa), but rather has used independent operational definitions of these quantities. Therefore the second law is not a tautology. It tells us something we did not already know. | In principle, I suppose one could define force in terms of dp/dt, in which case we would have a tautology. The book comes rather close to doing this ... so close that the denial on page 197 was deemed necessary. |

This highlights the fact that the book does not do a very good job of laying down a solid operational definition of force ... unless I have overlooked something, something that is not mentioned in the index or the table of contents. This stands in contrast with PSSC Physics which in my opinion does an outstanding job of defining force in terms of a standard spring. It goes on to give a simple yet scrupulously principled explanation of the algebraic properties that a force must have.

To say the same thing another way, when page 197 says «Fnet and dp/dt Are NOT the Same!», that is non-constructive. It tells us what the relationship isn’t ... whereas it would be much more constructive to tell us what the relationship is.

However, the fact is that the centrifugal field is as real as the ordinary laboratory gravitational field. Both are 100% dependent on choosing a non-inertial reference frame. In particular, the centrifugal field exists in the rotating frame and not otherwise. See reference 11 for a discussion of how this works.

This contributes to getting the wrong answer in item 25.

This is particularly relevant, because people tend to identify with people, and the book presents example after example where real-world people would not naturally use the terrestrial laboratory frame, namely the people aboard the orbiting space station, the person swinging on the vine, the people aboard the airliner, the people riding the Hoffmeister rotor, et cetera. On page 203 it quite explicitly focuses on the «feeling» of the passengers. In all these situations, real-world people would be entirely within their rights to choose a rotating reference frame in which centrifugal forces are key to analyzing the situation.

It would be OK to say that rotating reference frames are outside the scope of the course ... but it is absolutely not OK to suggest that they don’t exist or are «incorrect». See reference 11.

The thing that really cracks me up is the utter futility of saying that the rotating-frame analysis is wrong. Based on years of experience such as riding in cars, students can tell by the seat of their pants – literally – that the centrifugal field exists. Even if you train them by rote to say in class that the concept of centrifugal force is wrong, as soon as they step outside the classroom they will go back to using the concept ... probably using it more-or-less correctly.

See also section 2.10.

Also note that this is inconsistent with the definition of «the momentum principle», as discussed in item 18.

Further note that on page 118, conservation of momentum is called «conservation of momentum». Similarly on page 585, conservation of charge is called «conservation of charge». This suggests that there cannot be any principled reason why conservation of energy could not be called by its proper name.

- If one student pushes another, is this positive work or negative work? It depends on which student you consider “the” system. Symmetry tells us the question is unanswerable, as a matter of principle.

- If you catch a fastball, is this positive work or negative work? You could say negative work is done on the ball by you ... or you could say positive work is done on you by the ball.

If one is not fastidious about the work-by/work-on distinction, all sorts of minus-sign errors show up. Errors affecting the sign of W can be found in the text; see section 4 and item 76.

The non-coverage of this issue is particularly glaring when contrasted with the amount of attention given to less-important issues in this section, e.g. in the subsection «Positive and Negative Work», the subsection «A Common Mistake», and the subsection «Sign of Work Depends on Direction of Force and Direction of Motion».

This issue is exacerbated by the fact that most of the thermodynamics literature adopts the opposite convention, using W to represent the work done by the engine, for obvious reasons. This means the students are likely to be confused the first time they read anything other than this book.

Tangential remark: This touches on an even deeper fundamental issue: Introductory texts tend to talk in terms of “the” system and “the” surroundings. This is unworkable in the long run. It breaks down in some quite simple systems; for instance, when one student pushes on another, symmetry makes it impossible to decide which student is the “system” and which is the “surroundings”. The breakdown is even more spectacular in more complicated situations, such as in fluid dynamics, where one must divide the fluid into many parcels, each of which acts as a “system” unto itself, even while acting as part of the “surroundings” for other parcels.

Suggestion: Be painstakingly explicit. Rather than writing simply W, write W[on gas] or W[by engine] or whatever.

As further evidence for the importance of this, consider the endless holy wars over the definition of “heat” that one finds in the PER literature.

| (4) |

On page 527, equation 527.77 expresses the same general idea:

| (5) |

Equation 527.77 appears in a section that focuses attention on an isothermal compression, so no harm would be done if the student wrote this restriction next to the equation, explicitly:

| (6) |

Equation 528.69 expresses the same idea:

| (7) |

The same symbols appear again in equation 530.26 ... but with a different meaning:

| (8) |

The same symbols appear again in equation 531.07, with yet a different meaning:

| (9) |

At this point the student will almost inevitably conclude that the unadorned equation 5 is true in general, without restriction. Evidently it was a waste of time to keep track of the limitations as shown in magenta above. The only problem is, equation 5 is not true in general! Equation 4 is not the «general» definition of work. Somebody with a solid understanding of thermodynamics would know this, but the student has no way of figuring this out.

Keep in mind that at this point in the course, the student might have studied calculus for only about half a year. According to everything we know about calculus at this level (and several levels beyond that), the RHS of equation 5 implicitly demands that the LHS be considered a function of V1 and V2. We can make this explicit by rewriting it as:

| (10) |

The problem is, equation 10 is grossly incorrect (when applied to uncramped thermodynamics). In particular, consider a complete thermodynamic cycle, in which the initial state (1) is the same as the final state (2). Equation 10 tells us that the work done by any thermodynamic cycle is zero. There is no other reasonable interpretation of this equation.

In order to understand thermodynamics we need to treat W as a functional of the entire path Γ that connects state 1 to state 2. Someone with a solid understanding of thermodynamics would write it as

| (11) |

Alas, the chance that students will figure this out on their own is virtually zero. This concept needs to be explained. Indeed it will take a lot of explaining, especially if they have never seen the concept of “functional” before, or even so much as heard the word.

Equation 5 is an impediment to understanding, because it indicates to the student that W is something it’s not. This is a conspicuous example of the “limitations” issue discussed in section 2.1.
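
A small numerical sketch shows the path dependence directly. It assumes W denotes the work done on the gas, W = −∫P dV (a sign convention chosen here for illustration), and it uses two made-up states in arbitrary units; the function and variable names are hypothetical.

```python
def work_on_gas(path):
    """Work done ON the gas, W = -integral of P dV, along a piecewise-linear
    path given as a list of (V, P) points. The trapezoid rule is exact for
    straight segments in the V-P plane."""
    total = 0.0
    for (v0, p0), (v1, p1) in zip(path, path[1:]):
        total -= 0.5 * (p0 + p1) * (v1 - v0)
    return total


state_1 = (1.0, 2.0)  # (V, P), arbitrary units
state_2 = (3.0, 1.0)

# Same endpoints, two different routes through state space.
path_a = [state_1, (3.0, 2.0), state_2]  # expand at high pressure, then lower the pressure
path_b = [state_1, (1.0, 1.0), state_2]  # lower the pressure, then expand at low pressure

w_a = work_on_gas(path_a)
w_b = work_on_gas(path_b)

# Closed cycle: out along path A, back along path B reversed.
cycle = path_a + path_b[::-1][1:]
w_cycle = work_on_gas(cycle)

print(w_a, w_b, w_cycle)
```

The two routes share the same V1 and V2 yet give different values of W, and the closed cycle gives a nonzero result. No function of the endpoints alone can reproduce that.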

This problem is further exacerbated by negative transference of the following kind: Students come to class with years of experience – and years of schooling – restricted to cramped thermodynamics, i.e. situations so restricted that it is impossible in principle to build a heat engine. Specifically, I’m talking about situations like warming a bottle of milk and then cooling it off again, i.e. situations where the heating and cooling are restricted to a single one-dimensional simply-connected path through thermodynamic state space. In cramped situations, you can get away with using equation 5 and similar equations that suggest that heat-content and work-content are functions of state. Students more-or-less universally assume that these equations are valid in general, which is a tremendous barrier to understanding.

Telling the students once or twice that heat-content (aka caloric) is not a function of state is nowhere near sufficient. They need a workable set of modern concepts to replace their 18th-century notion of caloric. Things like equation 5 are exactly what they do not need. Equation 11 is correct but difficult for students to understand. My point is that replacing a difficult equation with an incorrect equation is not helpful. For details on how to do this right, see reference 12.

- On pages 301–302, «heat» is defined as «Q = energy transfer due to a temperature difference».

- On page 303, this same Q is used in the alleged statement of «the energy principle».

- On page 483, we find the «definition of entropy S».

- On page 487, we find the «definition of temperature T» in terms of entropy and energy.

- On page 488, we find the «entropy change associated with small Q», namely

$\Delta S = \dfrac{Q}{T}$ (12)

There is a serious inconsistency somewhere here. You could arrange to make any one of those statements true, and maybe even two or three of them, but not all of them at the same time.

Consider a situation where Q is zero, in the sense of page 301, i.e. no thermal transfer of energy. If we plug this same Q into equation 12, we find that ΔS must be zero (assuming nonzero temperature). In other words, the entropy must be constant. However, we know that’s not true, because there are lots of ways that entropy can increase without being transferred across a boundary. The falling coffee filters on page 311 are an example. The case of a block rubbing against an identical block is an example where it is even more obvious that no «heat» is being transferred, even though the entropy is increasing. It’s obvious by symmetry.

The fundamental problem is that in physics, there are multiple inconsistent definitions of «heat». The book gives one of the definitions on pages 301–302, but then mixes in the others without warning, without explanation.

The simplest way out of this mess is to explain that the ΔS in equation 12 is only one contribution to the total change in entropy. There are other contributions as well. This is quite a significant statement, especially given that in classical (pre-1898) thermodynamics, equation 12 was used to define entropy.
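
One way to write that bookkeeping, reading equation 12 as ΔS = Q/T, is sketched below. The label ΔS_created is introduced here for illustration and is not the book's notation.

```latex
\Delta S_{\text{total}} = \frac{Q}{T} + \Delta S_{\text{created}},
\qquad \Delta S_{\text{created}} \ge 0
```

For the two identical blocks rubbing together, Q = 0 by symmetry, so the entire entropy increase sits in the second term.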

For more perspective on this mess and how to avoid it, see reference 12.

In any uncramped situation, i.e. in any situation where it is possible in principle to build a heat engine, it is provably impossible to define a state function that quantifies the «thermal energy». Calling it «thermal energy» instead of “heat” or “caloric” does not help, not even a little bit. For example, suppose I have one liter of nitrogen gas at STP. How much «thermal energy» does it contain? How much nonthermal energy? I have no idea how much, because the question is ill-posed.

Everything that chapter 7 (“Internal Energy”) says about «thermal energy» content will have to be unlearned as a prerequisite for getting through chapter 13 (“Gases and Engines”).

|

On the previous line in the text, Δy/Δx is indeed a fraction, and obeys the usual rules for fractions.

However, dy/dx is pronounced “dy by dx” not “dy over dx” and is not really a fraction. It is written in a way that looks like a fraction, but technically it is not dy divided by dx. The only thing dy by itself could possibly refer to is the exterior derivative, and dy/dx is not the ratio of two exterior derivatives. It is best to consider dy/dx as an invariable idiomatic expression. It doesn’t really have a numerator or a denominator, so it doesn’t make sense to stick “F” in the supposed numerator.

Specifically, rather than

|

it would be better to factor out the F before passing to the limit of Δ→0.

|

The same issue arises again at the top of page 305.

Counterexample: betatron. See reference 13.

Alas, there are two inequivalent definitions of photon in common use in the physics community. The definition given here is not wrong, but it is not the whole story.

- A photon is an excitation of a particular mode of the EM field. Each mode of the field is a harmonic oscillator, and as such the energy states are quantized, and the quantum number N is called the photon number.

Any such photon is necessarily monochromatic.

- A photon is a localized wave packet. Typically one imagines a Gaussian envelope on top of a running-wave carrier.

Any such photon is necessarily not monochromatic. If it is localized, it can’t be monochromatic.

Note that there are many macroscopic systems where quantum effects play a prominent role. See e.g. reference 14.

That’s either wrong or highly misleading. It is in fact routine to find systems in states that do not correspond to any of the quantized “energy levels”.

For starters, every sinusoidal voltage you’ve ever seen – and the sinusoidal motion of every oscillator you’ve ever seen – corresponds to a coherent state, not a definite-energy state. For more about coherent states, see reference 15.
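
To put numbers on this, here is a minimal sketch using the standard result that a coherent state has a Poisson distribution over the oscillator's quantum number n. The mean of 4 quanta and the names below are arbitrary illustrative choices.

```python
import math


def number_distribution(mean_n, n_max):
    """Poisson probabilities P(n) of finding n quanta in a coherent state
    whose mean number of quanta is mean_n."""
    return [math.exp(-mean_n) * mean_n ** n / math.factorial(n)
            for n in range(n_max + 1)]


mean_n = 4.0
probs = number_distribution(mean_n, n_max=60)

total = sum(probs)
mean = sum(n * p for n, p in enumerate(probs))
variance = sum((n - mean) ** 2 * p for n, p in enumerate(probs))
largest = max(probs)

print(f"sum of probabilities : {total:.6f}")
print(f"mean number of quanta: {mean:.6f}")
print(f"variance             : {variance:.6f}")
print(f"largest single P(n)  : {largest:.4f}")
```

The variance equals the mean, so the state is spread over many energy levels, and in this example no single level carries even a quarter of the probability.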

As another example, every atomic transition has some nonzero linewidth. This tells us that every state (except possibly the ground state) corresponds to a range of energies.

Similar words apply to quantization of angular momentum; see item 56.

Meanwhile, the momentum part of this is exceedingly weird. It’s like saying the black eagle shrieked at dawn, and therefore we can use conservation of momentum. My point is that momentum is always conserved, period. There is no need for any shaggy dog stories or any other preconditions for applying conservation of momentum.

I assume a major purpose of the course is to teach students to think clearly. This exceedingly muddled reasoning is unhelpful. See also item 18.

Similarly, the «gravitational interaction of two asteroids» is an infinite-range interaction.

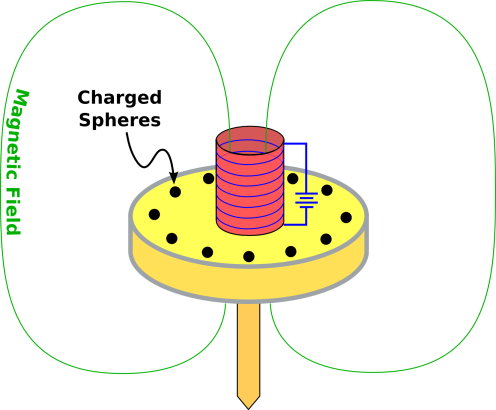

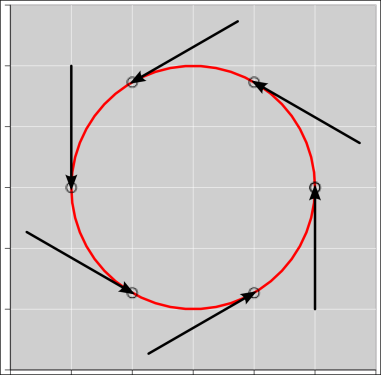

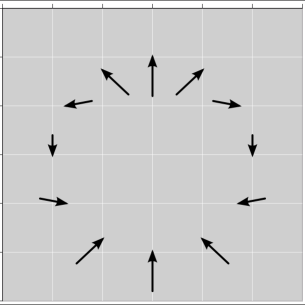

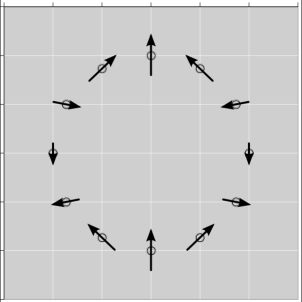

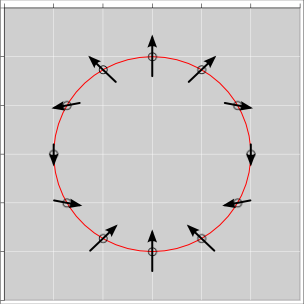

Suggestion: Choose some better examples, such as a small iron ball being scattered by the magnetic field in the gap of a large magnet, such as the one shown in figure 6. Given suitable initial conditions, the ball is deflected, even though it does not hit the pole pieces or any other part of the magnet. With skill you can arrange that it does a loop-de-loop and then emerges without being captured, such that the ball’s momentum vector rotates by slightly more than 360 degrees.

In the next paragraph it mentions the equatorial bulge, and mentions tides, and says that «over many thousands of years» these can lead to noticeable effects. I suppose that is true as far as it goes, but it is nowhere near being a sufficient correction to the errors in the previous paragraph. An accurate map is all you need to detect that gravity does not point toward the center of the earth; you do not need to wait thousands of years. See reference 7.

Similar errors are discussed in item 24.

When the Bohr model was first proposed, 100 years ago, it was better than all competing models. However, for 85 of those 100 years, the Bohr model has been out of date. There is no good reason to mention it at all. Much better models are available. Everything in this section will have to be unlearned as a prerequisite to understanding anything useful about quantum mechanics in general or atoms in particular.

Suggestion: If you feel the urge to talk about the Bohr model, lie down until the feeling goes away.

Of course it is important to have “some” model of how atoms work, but it would be a tremendous mistake to think there is a choice between the Bohr model and nothing ... or between the Bohr model and some prohibitively complex alternative.

Constructive suggestions include:

- Perhaps the best advice is to get a circular tub or pool of water and practice setting up various wavefunctions.

- You can also study waves on a string, which is a good model for shedding light on some of the things that atomic wavefunctions do.

- There are also computer models that use dot-density animations.

- There are also computer models that use color-coded phase and amplitude.

- If you feel obliged to mention the constant known as the Bohr radius, you could derive it using a particle-in-a-box argument. See e.g. Feynman volume III page 2-6. The effort involved for writing down the particle-in-a-box wavefunctions is less than for writing down the circular Bohr orbits. It is no less correct, and is incomparably better as a foundation for further developments.

- Instead (or additionally), you could model the atom as a harmonic oscillator, i.e. as a charged mass on a spring. Then talk about the states of a quantum harmonic oscillator. Once again, this would be far better than the Bohr model as a foundation for further developments (including phonons, photons, et cetera). Also, this would be very much more in line with everything else in the book, starting with the iconic ball-and-spring model on the cover.

- Et cetera.

Several of these suggestions are discussed in more detail in reference 17.

The Bohr model doesn’t explain anything ... not anything worth knowing anyway. It successfully predicts a hydrogen energy spectrum that agrees with experiment, but this agreement is essentially fortuitous. Specifically, there is nothing in the Bohr model to explain why it makes some correct predictions for a 1/r potential, and for nothing else. If you apply the Bohr model to 92 different elements, you get more than 91 incorrect predictions. Even for hydrogen, it gets the wrong answer for the angular momentum of the ground state. It also gets the optical selection rules all wrong.

It seems like a poor use of resources to focus attention on the Bohr model, to the exclusion of other models that are simultaneously simpler and more informative.

Sorry, no, that is neither predicted by quantum-mechanical theory nor confirmed by experiment. Just the opposite. See e.g. reference 15.

This issue has been discussed since Day One of quantum mechanics. Planck used quantization of energy as an Ansatz, as a hypothesis. The fact that it led to a formula that was consistent with experiment does not prove that the hypothesis is correct, nor does it rule out the possibility that other hypotheses would lead to the same formula. Planck was well aware of this, and emphasized the point. A lot of people thought he was being overly cautious, but it turns out he was completely right. Energy is not necessarily quantized, and there are lots of other ways of getting the correct black-body radiation formula.

The most general expression for the entropy is the quantum statistical mechanics expression

| (15) |

which gives the same result in any basis. The energy-eigenstate basis is no worse than any other basis, but it is no better. In Planck’s day the formalism was nowhere near capable of expressing this result clearly, but it is now. The result is sensitive to the number of states in the basis, but not to which basis you use. The number of states is unchanged by a change of basis.
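As a small numerical illustration of the basis-independence claim, the sketch below assumes the expression in question is the standard form S = −Tr(ρ log ρ), in units where k = 1. It uses a real 2×2 density matrix so that plain Python suffices. The particular populations (0.7, 0.3) and the rotation angle are arbitrary choices of mine.

```python
import math

def change_basis(rho, theta):
    """Return R rho R^T for a real 2x2 rotation R(theta), i.e. rho in a rotated basis."""
    c, s = math.cos(theta), math.sin(theta)
    r = [[c, -s], [s, c]]
    tmp = [[sum(r[i][k] * rho[k][j] for k in range(2)) for j in range(2)]
           for i in range(2)]
    return [[sum(tmp[i][k] * r[j][k] for k in range(2)) for j in range(2)]
            for i in range(2)]

def entropy(rho):
    """-Tr(rho log rho), in nats, for a real symmetric 2x2 density matrix."""
    a, b, d = rho[0][0], rho[0][1], rho[1][1]
    half_trace = (a + d) / 2.0
    radius = math.sqrt(((a - d) / 2.0) ** 2 + b * b)
    eigenvalues = [half_trace + radius, half_trace - radius]
    # The trace of a function of rho is the sum over eigenvalues of rho.
    return -sum(p * math.log(p) for p in eigenvalues if p > 1e-15)

rho_energy_basis = [[0.7, 0.0], [0.0, 0.3]]           # diagonal in one basis (assumed)
rho_other_basis = change_basis(rho_energy_basis, 0.6)  # same state, different basis

s_energy = entropy(rho_energy_basis)
s_other = entropy(rho_other_basis)
print(f"entropy in the first basis:   {s_energy:.6f} nats")
print(f"entropy in the rotated basis: {s_other:.6f} nats")
```

The matrix elements change under the rotation, including the appearance of off-diagonal terms, but the entropy does not.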

Suggestion: Rather than falsely saying that the energy is quantized, it would be better to say that statistical mechanics requires us to be able to count how many basis states there are in a given basis. The energy-eigenstate basis is by no means the only possible basis, but it is convenient for the present purpose.

So the argument leading to equation 475.37 is wrong, and the equation itself is wrong. The only good thing is that the equation is irrelevant; it is not needed for the further development of the subject.

- The combinatorial calculation on the next page can be done equally well with coins or spin systems, where the states are easy to count correctly.

- The proof that equilibrium is isothermal does not require a globally linear distribution of energy levels – and it’s a good thing it does not. All it requires is some local density of states, and as the smart-aleck saying goes, to first order everything is linear. The energy-eigenstate basis is particularly convenient for this application, but even so, the fact remains: energy states are not the only states; they are not even the only basis states.

Entropy has to do with distributing the probability (not «energy»). Entropy is well defined, even if the energy is unknown, irrelevant, and/or zero. Example: Shuffling a deck of previously sorted cards increases the entropy, for reasons having nothing whatsoever to do with energy. The τ2 processes in NMR provide a well-known physics-lab manifestation of the same idea.

Furthermore, entropy has to do with distributing the probability among the microstates in some abstract high-dimensional space (not «objects» in space). The canonical example is two identical blocks (or flywheels) rubbing against each other. Entropy increases, even though it is obvious by symmetry that no energy was exchanged. See reference 12.

| (16) |

Sorry, alas, that is not the proper definition. It has been known since 1898 that it is wiser to define:

| (17) |

Then it takes only one line of algebra to derive equation 16 as a corollary, valid only if all the accessible states are equiprobable. For a discussion of how this works and why it is very important, see reference 18.
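The one-line reduction can be checked numerically. The sketch below assumes the probability-based definition is the familiar S = −k Σ Pᵢ log Pᵢ and works in units where k = 1. Setting every Pᵢ = 1/W gives −Σ (1/W) log(1/W) = log W, and any non-uniform distribution over the same W states gives less. The example distributions are my own.

```python
import math

def entropy_from_probabilities(probs):
    """S/k = -sum_i P_i log P_i, skipping states with zero probability."""
    total = sum(p * math.log(p) for p in probs if p > 0.0)
    return -total if total else 0.0

W = 8  # number of accessible states (illustrative)
uniform = [1.0 / W] * W
lopsided = [0.65, 0.2, 0.1, 0.05, 0.0, 0.0, 0.0, 0.0]
known_state = [1.0] + [0.0] * (W - 1)

s_uniform = entropy_from_probabilities(uniform)
s_lopsided = entropy_from_probabilities(lopsided)
s_known = entropy_from_probabilities(known_state)

print(f"log W                 = {math.log(W):.4f}")
print(f"equiprobable states   = {s_uniform:.4f}")
print(f"non-uniform states    = {s_lopsided:.4f}")
print(f"single known state    = {s_known:.4f}")
```

Only the equiprobable case reproduces log W. The counting formula overstates the entropy whenever the probabilities are unequal.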

I am quite aware that “S = k log W” appears on Boltzmann’s tombstone, but I’m pretty sure he didn’t put it there. He knew better. In any case, not everything you find carved in stone is guaranteed to be correct.

We need the second law to be a fundamental law. It is not sufficient to describe the «most probable» behavior. Is there a lesser probability that the entropy will be unchanged? Is there a lesser probability that the entropy will spontaneously decrease?

Similarly, we need the fundamental law to apply to all systems, not just non-equilibrium closed systems. For starters, the law needs to cover reversible flow of entropy between two systems at equilibrium. It also needs to cover nearly-reversible flow between two systems nearly at equilibrium.

It would be just fine to state a corollary that applied to closed systems, but it should be called a corollary and not be passed off as a fundamental law.

The connection between entropy and disorder is a pernicious misconception. It is important to keep in mind that entropy is a property of the distribution, a property of the ensemble, a property of the macrostate. In contrast, disorder (to the extent it can be defined at all) is a property of the microstate.

A system that is in any particular known microstate has zero entropy, even if the microstate looks “disorderly”.

This is all the more significant because many students have already been exposed to this misconception. Reinforcing the misconception is unhelpful.

The idea of holding «everything» else constant betrays a profound misconception about how partial derivatives work. This is a very common misconception, resulting from how the idea of partial derivative is introduced in math courses ... but still we should not perpetuate and propagate the misconception.

That’s not true, not even roughly. If you want a familiar counterexample, consider a diatomic gas at ordinary temperatures.

The converse would be true: If you change the energy levels while preserving the occupation numbers of corresponding states then the entropy is unchanged. However, the converse is not the same thing, not even approximately.

| (18) |

Using basic algebra we can rewrite this as:

| (19) |

The thoughtful student will be confused by the fact that S is a function of state and T is a function of state ... so one can infer that the RHS of equation 19 is a function of state, or rather a function of the two states (1) and (2). However, alas, this equation is not true. Its LHS is not equal to its RHS. The LHS is not a function of the two states (the initial and final states). In fact it is a functional of the path, depending on every detail of the path Γ connecting the initial and final states.
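The path dependence is easy to exhibit numerically. The following sketch is my own illustration, assuming one mole of a monatomic ideal gas and reversible processes. It connects the same initial and final states by two different two-leg paths. The state values are arbitrary.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)

def heat_and_entropy(path, n, cv, T1, V1, T2, V2):
    """Heat absorbed and entropy change along a reversible two-leg path.

    "heat_then_expand": isochoric T1 -> T2 at V1, then isothermal V1 -> V2 at T2.
    "expand_then_heat": isothermal V1 -> V2 at T1, then isochoric T1 -> T2 at V2.
    """
    q_isochoric = n * cv * (T2 - T1)
    ds_isochoric = n * cv * math.log(T2 / T1)
    ds_isothermal = n * R * math.log(V2 / V1)
    if path == "heat_then_expand":
        q_isothermal = n * R * T2 * math.log(V2 / V1)
    elif path == "expand_then_heat":
        q_isothermal = n * R * T1 * math.log(V2 / V1)
    else:
        raise ValueError(f"unknown path: {path}")
    return q_isochoric + q_isothermal, ds_isochoric + ds_isothermal

# Assumed states: (300 K, 1 unit of volume) -> (400 K, 2 units of volume).
args = dict(n=1.0, cv=1.5 * R, T1=300.0, V1=1.0, T2=400.0, V2=2.0)
q_a, ds_a = heat_and_entropy("heat_then_expand", **args)
q_b, ds_b = heat_and_entropy("expand_then_heat", **args)

print(f"path A: Q = {q_a:8.1f} J, delta S = {ds_a:.4f} J/K")
print(f"path B: Q = {q_b:8.1f} J, delta S = {ds_b:.4f} J/K")
```

The entropy change is the same on both paths, as it must be for a function of state, while the heat differs by several hundred joules.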

Telling students once or twice that Q is not a function of state is not good enough. The students need a workable set of ideas to replace their wrong preconceptions about Q. Most of all they need to be protected from things like page 488, which strongly reinforces wrong ideas.

Suggestion: In this case, an astonishingly simple repair can be made.

| (20) |

I mean seriously, if equation 18 is going to state the requirement for nearly-constant T, we should state the requirement for constant V, which is at least as important.

This allows us to write E on the LHS. Now all three variables in the equation – E, T, and S – are functions of state. There should be no question about what they mean. By way of contrast, there are endless holy wars about what Q is supposed to mean. Writing TdS and PdV makes the whole issue go away.

Just as importantly, by stating that the process moves along a contour of constant V, we lay the foundation for a proper understanding of how partial derivatives work. The rule should be (especially in the introductory class) to never write a partial derivative without explicitly indicating what is being held constant. The same rule applies to finite differences, when they are being used as a euphemism for partial derivatives, as in equation 18.

A similar issue is discussed in item 74. This is part of a larger problem, as discussed in section 2.1.

It is a basic rule of English that a sentence should express a single idea. The quoted sentence would be just fine without the parenthetical remarks about units. If you want to add a lesson about units, it should be in a separate sentence.

Furthermore, the laws of physics, when properly stated, are independent of the units of measurement. The parenthetical interpolations teach the wrong lesson about this.

In reality, Planck’s constant is called the quantum of action (not the quantum of energy). The thermodynamic evidence supports the idea that there is a certain number of basis states per unit area in phase space. We are not obliged to choose energy eigenstates as our basis states. Furthermore, no matter what basis is chosen, the basis states are not the only states.

In reality, the point of a heat bath is that its temperature does not change very much, even though the energy does change.

This is significant, because the quoted sentence is fatal to the objective of the whole section, namely the derivation of the Boltzmann distribution.

That is true if the large system is very cold, and not otherwise. We know this by direct application of the definition of temperature.

The quoted sentence is yet another fatal blow to the argument that needs to be made to fulfill the objective of the whole section, namely the derivation of the Boltzmann distribution.

| (21) |

I would suggest writing it in this form. The equations that currently appear on the page make the result seem more complicated than it really is.

If equation 533.66 were to be applied to the adiabatic phases of a Carnot cycle, we would conclude that the energy was constant during these phases, which would be quite wrong. This is part of a larger problem, as discussed in section 2.1.

The student has no way of figuring out what restrictions apply to the statements in the book. Indeed the title of the chapter refers to “Engines” without restriction – seemingly not limited to heat engines. See also section 2.1.

I would strongly recommend rewording the paragraph, so that rather than redefining QL and QH, it merely considers the case where QL and QH are both negative. This would require flipping the signs of QL and QH in equation 541.65 and a couple of related equations in the following paragraph. This would be consistent with centuries-old proper algebraic methods.

The argument starts with some unrealistic assumptions and proceeds by specious arguments to an absurd conclusion. The fact that the observed power-plant efficiency is anywhere near the predicted value is little more than a numerological coincidence. The discussion utterly fails to consider more plausible explanations for the observed efficiency. For details on this, see reference 20.

It is true that the net amount of charge on a typical object might be 15 orders of magnitude smaller than the total number of atoms in the object ... but the electrical interaction is so strong that this charge cannot be neglected.

For an overview of the key properties of real insulators, see reference 21.

- Static electricity is allegedly explained by molecules that «can be broken fairly easily» (page 587).

- Static electricity is associated with insulators such as «glass» and «silk» (page 587).

- Insulators have «molecules that do not easily break apart» (page 586).

The sad thing is that the double-talk is unnecessary. The physics of contact electrification is simultaneously simpler and far more interesting than the story that is being told in the book.

In particular, consider the conjecture that «It may be significant that almost the only materials that can be charged easily by rubbing are those that contain large organic molecules, which can be broken fairly easily.» On the one hand, it is commendable that this conjecture is fairly clearly labeled as such, by means of red-flag words such as «may be» and «almost». On the other hand, it is not a successful conjecture. It has been known for 100 years that airborne powdered metals readily pick up a tremendous charge. This effect is sometimes exploited on a large scale, in the mining / refining industries.

I see no way that a naïve student – or even a PhD physicist – could read that and come away with any understanding of the situation.

First of all, some rhetorical questions: Which is it: Molecular breakage, or electron transfer? If there is an explanation, why not give the explanation, rather than just asserting that one exists? When I run a plastic comb through my hair, does that cause more molecular breakage than some other kind of comb? If molecules are being broken, what happens to the broken pieces? Do they build up on the surface? Last but not least, isn’t it unduly circular to say that «electron transfer» provides an explanation for charge transfer?

I don’t know what this is, but it isn’t good physics, and it isn’t good pedagogy.

Furthermore, the basic mechanism of contact electrification is not the subject of current research. There have been scholarly papers on the subject for 60 years that I know of, and possibly longer. See reference 22 and references therein.

First of all, it is not good writing to mention new ideas for the first time in the «Summary and Conclusions».

More importantly, it would be better to change that to read something like

Presumably you have noticed that the amount of charge can be positive, negative, or zero.

That has the advantage of being right instead of wrong. There are definitely not two kinds of charge. If there were, we would need two variables: one to keep track of the “resinous electricity” and another to keep track of the “vitreous electricity”. In fact, we keep track of a single charge variable, which can be positive or negative. For details on this, see reference 23.

This was figured out by Watson (1747) and independently by Franklin (1747). Indeed, Franklin introduced the terms “positive”, “negative”, and “charge” for precisely this reason, to indicate a surplus or a deficit of the one type of electricity. See reference 24.

Rapidly? Really??? That’s a misconception, or at the very least it is unduly open to misinterpretation. In fact, the charge-to-charge interaction is a long-range interaction. Strictly speaking, it is an infinite-range interaction.

See also item 82.

- The second-best option would be to replace Q with |Q| in about a dozen places.

- The cleverer option would be to write an equation for the vector $\vec{E}$ in about a dozen places where the magnitude $E$ now appears. For example, the scalar equation 648.84 becomes the vector equation

  $$\vec{E} = \frac{1}{4\pi\epsilon_0}\,\frac{Q}{r^2}\,\hat{r} \quad \text{for } r > R \tag{22}$$

  Similarly, the scalar equation 649.72 becomes the vector equation

  $$\vec{E} = \frac{Q/A}{2\epsilon_0}\,\hat{x}\,\operatorname{sgn}(x) \tag{23}$$

  where sgn(x) is equal to +1 when x>0 and equal to −1 when x<0.

Tangential remark: I don’t expect the students to know about exterior derivatives at this point ... but if they did, there would be a more elegant way of writing the unit vectors in these equations. The scalar equation 648.84 becomes the vector equation

$$\vec{E} = \frac{1}{4\pi\epsilon_0}\,\frac{Q}{r^2}\,\vec{d}r \quad \text{for } r > R \tag{24}$$

Similarly, the scalar equation 649.72 becomes the vector equation

$$\vec{E} = \frac{Q/A}{2\epsilon_0}\,\vec{d}|x| \tag{25}$$
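The practical payoff of the vector forms in equations 22 and 23 is that the sign of Q takes care of itself, so no |Q| is needed. A minimal sketch follows. The function names and numerical values are my own, and the treatment of the point x = 0 (where sgn returns 0) is an assumption, since the text defines sgn only for x>0 and x<0.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m

def sgn(x):
    """+1 for x > 0, -1 for x < 0 (and 0 at x = 0, an assumed convention)."""
    return (x > 0) - (x < 0)

def field_outside_sphere(Q, position, R):
    """Vector E at `position` = (x, y, z), measured from the center of a
    uniformly charged sphere of radius R; valid only for r > R."""
    r = math.sqrt(sum(c * c for c in position))
    if r <= R:
        raise ValueError("this expression applies only outside the sphere")
    scale = Q / (4.0 * math.pi * EPS0 * r**3)  # Q/(4 pi eps0 r^2) times 1/r for r-hat
    return tuple(scale * c for c in position)

def field_of_sheet(charge_per_area, x):
    """x-component of E for a large uniformly charged sheet in the plane x = 0."""
    return charge_per_area / (2.0 * EPS0) * sgn(x)

e_positive = field_outside_sphere(+1e-9, (0.0, 0.0, 2.0), R=1.0)
e_negative = field_outside_sphere(-1e-9, (0.0, 0.0, 2.0), R=1.0)
print("positive Q:", e_positive)
print("negative Q:", e_negative)
print("sheet, x > 0:", field_of_sheet(1e-6, +0.1))
print("sheet, x < 0:", field_of_sheet(1e-6, -0.1))
```

Flipping the sign of Q flips the direction of the field vector automatically, and the sheet field points away from a positive sheet on both sides.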

Suggestion: At the beginning of chapter 17 and perhaps several other places, put a super-prominent warning:

This is a manifestation of the “limitations” issue, as discussed in section 2.1.

Alas, the “derivation” is bogus and the claim is wrong. We know it is wrong because energy is always conserved, but ΔV is not always zero. In fact, ΔV is routinely nonzero in AC systems. A betatron is a spectacular example. Transformers and ground loops are perhaps more familiar examples. There is energy in the field, and a moving charge can extract energy from the field.

Any correct derivation of ΔV = 0 would have to require the DC limit (or some other drastic restriction), which the “derivation” in the book does not. On the other edge of the same sword, once you require the DC limit, there is no need to mention conservation of energy; the Faraday-Maxwell equation suffices. So the claim on page 684 is wrong coming and going.
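For a sense of scale, here is a short sketch of the round-trip voltage around a loop that encloses a sinusoidally varying flux, using Faraday's law in the form EMF = −dΦ/dt. The field strength, loop area, and frequency are illustrative assumptions of mine, and the overall sign depends on the chosen direction around the loop.

```python
import math

def round_trip_emf(b_peak, area, freq_hz, t):
    """EMF = -dPhi/dt for the flux Phi(t) = b_peak * area * sin(2 pi f t)."""
    omega = 2.0 * math.pi * freq_hz
    return -b_peak * area * omega * math.cos(omega * t)

# Assumed values: 0.1 T peak field through a 10 cm x 10 cm loop.
emf_ac = round_trip_emf(b_peak=0.1, area=0.01, freq_hz=60.0, t=0.0)
emf_dc = round_trip_emf(b_peak=0.1, area=0.01, freq_hz=0.0, t=0.0)

print(f"60 Hz: round-trip voltage at t = 0 is {emf_ac:.4f} V")
print(f"DC limit: round-trip voltage is {abs(emf_dc):.4f} V")
```

The round-trip voltage vanishes only in the DC limit. At ordinary power-line frequency it is a sizable fraction of a volt even for a modest loop.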

The same bogus claim appears on page 768.

- Nowhere does it say – so far as I can tell – that we are dealing with a long, straight wire ... although the diagram tacitly suggests this. If the wire is not long and straight, none of the claims made about the magnetic field are true.

- On the other hand, if the wire is long and straight, the magnetic field must be perpendicular to the wire everywhere outside the wire (not just «directly under the wire»).

- It may be that n is a dimensionless number that gets multiplied by electrons per m³ to get a density, and similarly A is a dimensionless number that gets multiplied by m² to get an area, and so on for the other variables in the equation.

If so, this is bad practice that should not be taught to students. It is a style of dimensional analysis that has been out-of-date for about 100 years. It went out of style because it is unduly clumsy, laborious, and error-prone.

Furthermore, it is inconsistent with (almost) all other equations in the book, including other equations on the same page. Therefore, hypothetically, even if you decided to show this to students, it should not be sprung on them without warning; it would need to be explained in detail.

- It may be that n, A, v, et cetera represent dimensionful quantities, in accordance with the conventions used (almost) everywhere else in the book – and (almost) everywhere else in science and engineering. In this case, equation 717.51 is some weird fugue, trying to do two calculations at once.

No matter what the etiology, the cure is the same: This equation needs to be broken into two equations: One does the calculation in terms of normal, dimensionful quantities. The other performs a check on the dimensions.

- It may be that all three variables on the RHS are dimensionless, and then we multiply by «(number of electrons per second)», thereby making the variable i on the LHS a dimensionful quantity. This is at best self-inconsistent.

- It may be that all four variables are meant to be dimensionful, and the parenthetical expression «(number of electrons per second)» is merely a tangential, parenthetical remark. This is at best wildly inconsistent with the definitions and usages earlier on the page, in equation 717.51.

Again, the recommended cure is the same: Don’t try to state two ideas in the same sentence, or in the same equation. Split equation 717.91 into two equations: One does the calculation, while the other performs a check on the dimensions.

A more-or-less equivalent possibility would be to split it into one equation plus one plain English sentence that remarks upon the dimensions and/or units.
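The recommended split can be sketched in code. The example below assumes the relation in question has the familiar form i = n A v, with a plain count of electrons treated as dimensionless. It carries each quantity as a value together with its dimensions, does the calculation in one step, and checks the dimensions in a separate step. The helper names and numerical values are hypothetical.

```python
def quantity(value, **dims):
    """A dimensionful quantity: (value, {base dimension: exponent})."""
    return (value, {base: power for base, power in dims.items() if power != 0})

def multiply(*quantities):
    """Multiply quantities, adding the exponents of their dimensions."""
    value, dims = 1.0, {}
    for v, d in quantities:
        value *= v
        for base, power in d.items():
            dims[base] = dims.get(base, 0) + power
    return (value, {base: power for base, power in dims.items() if power != 0})

# Assumed values, expressed in one consistent system of units.
n = quantity(8.4e28, length=-3)           # mobile electrons per unit volume
A = quantity(1.0e-6, length=2)            # cross-sectional area
v = quantity(5.0e-5, length=1, time=-1)   # drift speed

# First equation: the calculation, in ordinary dimensionful quantities.
i = multiply(n, A, v)

# Second equation: the check on the dimensions, stated separately.
dimensions_ok = (i[1] == {"time": -1})

print(f"i = {i[0]:.3e} electrons per unit time")
print(f"dimensions of i: {i[1]}, check passed: {dimensions_ok}")
```

Nothing in the calculation step mentions seconds or meters. The choice of units enters only through the numbers that are fed in.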

As a specific example: On page 717, rather than defining Δt to be the number of electrons per second, it would be better to define it as the number of electrons per unit time. If some person wants to choose seconds as his unit of time, that’s OK ... but other persons may choose differently.

Not coincidentally, in the definition of «electron current», the defining equation is dimensionally unsound: The RHS is a vector (because velocity is a vector) while the LHS is evidently meant to be a scalar.

The best way to understand what is going on is to consider “the” current to be a vector. The variable i that appears in the defining equation 717.91 is a scalar because it is the projection of the current-vector onto some basis. The basis vector absolutely must be indicated on the circuit diagram at every point where the current is to be measured. This is standard practice in all of engineering and science. See figure 20.27 for an example of what this should look like. In contrast, figure 18.19 is conspicuously lacking any such basis vector. To make sense of equation 717.91, on the RHS the velocity needs to be dotted onto some basis vector. In an introductory setting it is helpful to write ix (rather than plain i) to indicate the component of current flowing in the x-direction. For details on all this, see reference 25.
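A brief sketch of the projection idea: the same physical drift velocity gives a positive or negative scalar current depending on which basis vector is marked on the diagram. The function name and the numbers are illustrative assumptions of mine.

```python
def dot(a, b):
    """Dot product of two 3-component vectors."""
    return sum(x * y for x, y in zip(a, b))

def current_component(n, area, velocity, basis):
    """Scalar current i = n A (v . e): the projection onto the unit vector e."""
    return n * area * dot(velocity, basis)

n = 8.4e28                        # carriers per unit volume (assumed)
area = 1.0e-6                     # cross-sectional area (assumed)
velocity = (5.0e-5, 0.0, 0.0)     # drift velocity vector (assumed)

x_hat = (1.0, 0.0, 0.0)
minus_x_hat = (-1.0, 0.0, 0.0)

i_x = current_component(n, area, velocity, x_hat)
i_minus_x = current_component(n, area, velocity, minus_x_hat)

print(f"projected onto +x: {i_x:.3e}")
print(f"projected onto -x: {i_minus_x:.3e}")
```

Without the basis vector, the sign of the scalar i carries no information, which is why the reference direction has to appear on the circuit diagram.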

- Long-distance power transmission lines are flipped every so often, to minimize radiative power losses.

- Twisted shielded pairs are routinely used for instrumentation.

- Twisted shielded pairs are routinely used for communication.

Constructive suggestion: It would be better to say something like “In the DC limit, circuit analysis is based on two principles....” This is hinted at by the statement of the first principle, which mentions the «steady state». Unfortunately, neither this hint nor anything else in the chapter tells us which (if any) aspects of the steady state are representative of the «general» case, so there is no support here for the «KEY IDEA».

Similarly, the statement of the second principle says «Round-trip potential difference is zero.» This is absolutely true. Indeed, one might consider it tautological, as a consequence of the definition of potential. Like most tautologies, this is not very informative. Actually it is worse than most, insofar as it suggests that thinking in terms of potentials is the «general» case.

The root cause of the problem is that all of chapter 17 taught the student that voltage is synonymous with potential, so the student is virtually certain to interpret the second principle as saying that the round-trip voltage drop is zero ... which is absolutely not true for AC circuits. Somebody with a sophisticated understanding of the subject would know that the voltage is not a potential in this situation, but the students don’t have this level of understanding. Indeed they haven’t even been shown the vocabulary that would allow them to formulate such a statement.

In any case, there is no hint as to what the voltage=potential case tells us about the «general» case. Bottom line: the alleged «KEY IDEA» is profoundly wrong.

This is an example of the broader problem discussed in section 2.1.

Beware that in this book, expressions like «It is reasonable to expect» or «One might reasonably assume» are used to introduce misconceptions. These expressions are red flags. See e.g. page 757, near the bottom.

I say that non-entropy is what gets used up, but the book does not explain this, or even hint at it. I say that a bulb cannot create or destroy charge, because charge is conserved. I say that a bulb cannot create or destroy energy, because energy is conserved. In contrast, the bulb does create entropy.

The book says that charge cannot be used up, because it is conserved. Oddly enough, it does not apply the same logic to energy ... nor does it explain why the logic does not apply. It says energy «flows out» of the circuit. Oddly enough, it does not apply the same logic to the charge ... nor does it explain why the logic does not apply.

I say there is no principle of physics here, but rather a lot of engineering. The bulb is carefully engineered so that maximum light, minimum heat, and practically no charge flows through the envelope. The bulb is also engineered to minimize the amount of heat that flows out via the wiring, which is quite a trick, because the Wiedemann-Franz law guarantees that electrical conductivity implies a certain amount of thermal conductivity.

The book uses the phrase “used up” repeatedly, sometimes in scare quotes and sometimes not. I can’t figure out what the intent is. The phrase could be a misconception that was introduced just so it could be dispelled, or a correct idea that should be learned, or perhaps an approximation, or whatever.

Even if we think in terms of the “available energy” instead of the physics energy, some of the “available energy” that enters the light bulb is not used up, because the emitted light can be used to do useful work, and could in principle be returned to the circuit.

Setting aside the intent and focusing on the right answer: I don’t understand the strategy and structure of the discussion. The right answer is that a resistive element (such as a light bulb) cannot create or destroy charge and cannot create or destroy energy, but it does create entropy. I don’t see how students are supposed to figure that out, given that the discussion in the book doesn’t mention – or even hint at – the thing that actually gets used up. Conversely, it doesn’t make sense to frame the discussion by asking what gets used up, if the book is just going to discuss a bunch of things that are not used up.

It would be more constructive to state, at some point, the right answer. Even if you want to arrive there indirectly, so as to show the reasoning process, any sound logical argument would arrive at the right answer eventually.

Really? Outside the wire? For a bare wire in vacuum, that must mean the steering charge is in the vacuum space. For an insulated wire, that must mean the steering charge is somewhere inside the insulator.

That’s some seriously backwards logic. It would be OK to say that incompressibility would imply that the density is constant. However, the converse does not hold; there are lots of ways a compressible fluid could have a non-changing density.

Also note that on the previous page, i.e. page 755, it says «the mobile electron sea behaves rather like an ideal gas». You can’t have it both ways; either it’s incompressible or it’s a gas, one or the other.

As it turns out, I’m pretty sure the electron sea in (say) copper acts like a degenerate Fermi gas with a pressure in excess of 100,000 bar. I’m pretty sure it expands to fill whatever container it’s in. The fact that its density doesn’t change is not evidence that the gas is incompressible. All it really means is that the size of the container hasn’t changed, and any relevant change in the number of electrons is only a small percentage change.
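A rough check on the order of magnitude, using the zero-temperature free-electron model: the Fermi energy is E_F = ħ²(3π²n)^(2/3)/(2m) and the degeneracy pressure is P = (2/5) n E_F. The electron density used here (about 8.47×10²⁸ per m³, i.e. one conduction electron per copper atom) is a standard textbook figure that I am assuming. The model ignores the lattice and electron-electron interactions.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J s
M_E = 9.1093837015e-31   # electron mass, kg
EV = 1.602176634e-19     # joules per electron-volt

def fermi_energy(n):
    """Free-electron Fermi energy in joules for number density n (per m^3)."""
    return HBAR**2 * (3.0 * math.pi**2 * n) ** (2.0 / 3.0) / (2.0 * M_E)

def degeneracy_pressure(n):
    """Zero-temperature pressure of an ideal Fermi gas, P = (2/5) n E_F, in Pa."""
    return 0.4 * n * fermi_energy(n)

N_COPPER = 8.47e28  # assumed conduction-electron density of copper, per m^3

e_f_ev = fermi_energy(N_COPPER) / EV
pressure_bar = degeneracy_pressure(N_COPPER) / 1.0e5

print(f"Fermi energy:        {e_f_ev:.2f} eV")
print(f"degeneracy pressure: {pressure_bar:.3g} bar")
```

Within this idealized model the pressure comes out at a few hundred thousand bar, comfortably above the 100,000 bar figure.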

Compare item 107.

This is bogus reasoning in support of a false conclusion. It is absolutely routine for metal objects to have unbalanced amounts of charge. This is where capacitance comes from, including both purpose-built capacitors and parasitic capacitances. And not all the charge is «somewhere else».

Compare item 104.

This is perhaps an application of the utterly wrong idea of incompressible Fermi gas, discussed in item 105. It is consistent with the equally-wrong idea of electrons hanging around outside the wire, as discussed in item 104.

On the other hand, it is inconsistent with the notion of “ideal” electron gas mentioned on page 755.

First of all, it is not rigorously true that all unbalanced charge goes to the surface of a metal. Secondly, Gauss’s law is not required in order to obtain a good understanding of how the charge behaves inside a current-carrying metal, at the level of detail considered in this chapter.

The problem is, throughout this chapter, the assumption is made that charge density on a wire is proportional to voltage. This notion is reinforced many times by the words and diagrams.

This was entirely intentional. Elsewhere the authors explained this by appealing to the authority of Sommerfeld (1952) and Marcus (1941). Evidently they never checked whether the result is valid in general or only in the highly-symmetric case of a long straight wire.

This is tantamount to assuming that the wire has constant capacitance per unit length.

Many of the figure captions in chapter 19 label the charge distributions as «approximate», and the words on page 761 refer to a «very rough approximation». However, the qualitative statement at the bottom of page 760 is made without reservation, and all the figures show this qualitative trend. The problem is, it just isn’t true. Not even close.

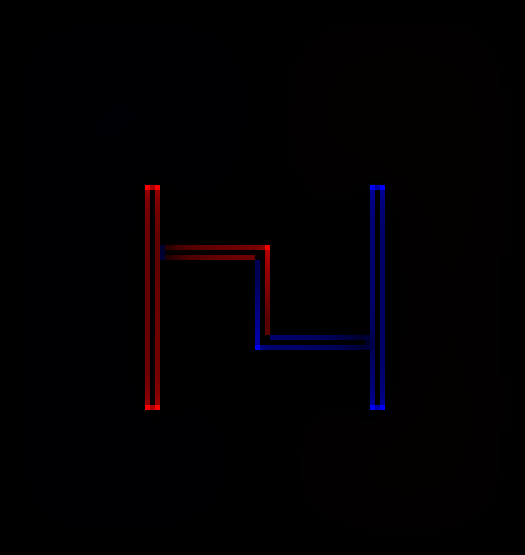

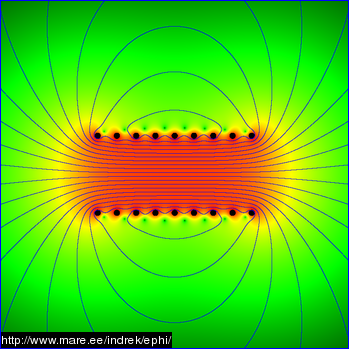

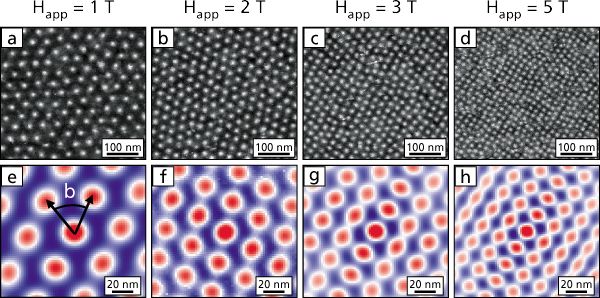

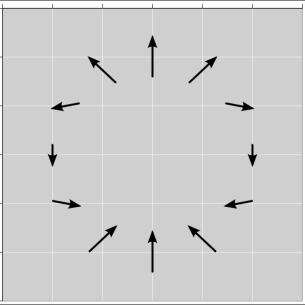

A much more realistic charge distribution is shown in figure 2.

This differs in many ways from what is shown in the book’s figure 19.17, and from what is said in the book’s words.

- The real charge distribution does not decrease monotonically as we move along the wire.

- The region of greatest positive charge density is not where the book says it is.

- The region of greatest negative charge density is not where the book says it is.

- There are places along the wire where one side of the wire has a very different charge density from the other side.

Why mention mobility at all? The only thing that matters is resistivity, so why not just say “resistivity”? Throwing around microscopic physicsy terms like mobility may create a semblance of erudition, but it’s worse than nothing when the terms are used wrongly.

Why pick carbon anyway? Why not use brass or bronze or nichrome?

See also section 2.2.

- As for the pedagogy: Ideas are always more important than terminology. Giving something a name is not the same as explaining it. If we know about Coulomb forces, calling something a «non-Coulomb» force tells us what it isn’t ... but doesn’t tell us what it is.

- As for the physics: There is nothing going on inside a battery other than chemistry. Think about the atomic Hamiltonian: there is a potential-energy term and a kinetic-energy term. The dominant contribution to the atomic potential is the Coulomb potential. Sure, there is a magnetic contribution also, and it is noticeable if you build a battery out of iron and operate it near the Curie temperature ... but even then, it is a minor correction term. The idea that any batteries (let alone all batteries) depend on «non-Coulomb forces» is really quite silly.

The thing that bugs me the most about FNC is that it pretends to be physics, but it isn’t. It is used in many places throughout the book, and in every case it could be replaced by some “magic demon force” Fmagic. Rather than a conveyor belt, we could draw a picture of little demons that use magic buckets to carry charge uphill from one electrode to the other. This is not a good situation, because magic is the opposite of physics. Actually, compared to the conveyor model, the demon model would make fewer wrong predictions:

- A normal conveyor belt operates at near-constant velocity; it does not impart a constant force to the cargo. This suggests that a battery should exhibit constant current rather than constant voltage. This is contrary to observations.

- Furthermore, suppose we try to fix this by imagining that the belt is moving much faster than the electrons, and imparts a force by friction, such that the force is nearly independent of the velocity of the electrons. Then we must predict a great deal of frictional heating, which is quite a wrong prediction.

- The «conveyor belt» model also suggests there is a large electric field in the gap of a charged-up battery, where the electrolyte sits. This is not observed.

Main suggestion: At the introductory level, the conventional and sensible approach is to treat the battery as a black box with two terminals. The properties of the black box are given in purely operational terms, either as a zeroth-order model (voltage, as seen e.g. on page 769) or as a first-order model (Thévenin open-circuit voltage and impedance).

To say the same thing the other way: If you start talking about what’s inside the black box, talking about a «conveyor belt» and a «non-Coulomb force» or demons or whatever, that’s not physics. You’re pretending to know things you don’t.
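To make the black-box idea concrete, here is a minimal sketch comparing the zeroth-order model (fixed voltage) with the first-order Thévenin model (open-circuit voltage plus a series resistance). The function names and the numerical values (1.5 V, 0.25 Ω) are illustrative assumptions of mine, not taken from the book.

```python
# Sketch of the two operational battery models. All numbers are
# illustrative assumptions, not measurements of any real battery.

def load_current(v_open, r_internal, r_load):
    """Current through a resistive load, using the Thevenin model."""
    return v_open / (r_internal + r_load)

def terminal_voltage(v_open, r_internal, current):
    """First-order model: the terminal voltage sags as current is drawn."""
    return v_open - current * r_internal

V_OPEN = 1.5       # open-circuit voltage in volts (assumed)
R_INTERNAL = 0.25  # Thevenin resistance in ohms (assumed)

for r_load in (100.0, 10.0, 1.0):
    i = load_current(V_OPEN, R_INTERNAL, r_load)
    v_first = terminal_voltage(V_OPEN, R_INTERNAL, i)
    # The zeroth-order model simply reports V_OPEN regardless of load.
    print(f"R_load={r_load:6.1f} ohm  zeroth-order V={V_OPEN:.3f}  "
          f"first-order V={v_first:.3f}")
```

Neither model says anything about what is inside the box. The two models agree for light loads and diverge as the load resistance approaches the internal resistance, which is the kind of limitation that should be stated when the zeroth-order model is used.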

Alternative suggestion: If you want to delve into the details, it’s not particularly hard to get the details right. It’s probably more work than most readers are willing to do, but it’s certainly doable. For a discussion of how a battery actually works, see reference 26.

As fond as I am of pointing out that voltages are not necessarily potential differences, this is a completely wrong example. If the voltage is not a potential, then there must be a loop somewhere such that the integral of the voltage around that loop is nonzero. Since this is a DC system, the loop voltage must be permanently nonzero. Then, according to the Maxwell equations, there must be an increasing magnetic field threading that loop, permanently increasing without bound. This is not observed.
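To spell out the step from a nonzero loop voltage to an unbounded magnetic field, here is a short sketch. The symbols $\mathcal{E}_0$ (the supposed constant loop voltage) and $\Phi$ (the magnetic flux threading the loop) are my own labels, not notation from the book.

$$
\oint \vec{E}\cdot d\vec{\ell} = -\frac{d\Phi}{dt}
\quad\Longrightarrow\quad
\mathcal{E}_0 = -\frac{d\Phi}{dt}
\quad\Longrightarrow\quad
\Phi(t) = \Phi(0) - \mathcal{E}_0\, t
$$

For any nonzero $\mathcal{E}_0$, the magnitude of $\Phi$ grows linearly with time, forever. No steady DC circuit behaves that way.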

This is a zeroth-order model of the role a battery plays in a real circuit. I have no objection to a zeroth-order model provided it is labeled as such. This is an instance of the “limitations” issue discussed in section 2.1.

It turns out that the sentence is trying to say something almost reasonable, but it contains at least two superficial misstatements and at least one deeper presentation / interpretation issue. Here is my attempt to figure out what’s going on here:

- For one thing, rather than talking about the «gradient» of surface charge, perhaps it meant to say the directional derivative, in the direction that runs along the wire. There is a huge difference between a gradient and a directional derivative.

- Rather than talking about “the” electric field, perhaps it meant to say the projection of the electric field, projected along the direction that runs along the wire. The overall electric field is wildly different as to both direction and magnitude.

Note that this item and the previous one can be understood as two manifestations of the same issue, if you re-interpret everything in terms of what’s going on in the one-dimensional subspace defined by an idealized thin wire. Alas, this does not really solve the problem, because it is very doubtful that students will be sophisticated enough to switch to this interpretation. The text says nothing to explain or even suggest this interpretation. Forsooth, this interpretation is directly contrary to what the text says about the importance of thick wires.

- Last but not least, the text suggests trying to infer the surface charge density from the diagram. This is somewhere between difficult and impossible, because the radius of the wire is changing, and because the diagram more directly portrays amount of charge per unit length than surface density per se (charge per unit area). At any given voltage, the surface charge density is independent of radius r, but the amount of charge per unit length is proportional to r. The diagrams would be hard to interpret even without variability in the radius, and then the variability adds injury to insult, i.e. it demotes the diagram from hard-to-interpret to essentially wrong.

Suggestion: In this diagram and in dozens of others, it would be better for all the wires to have the same radius. Changing the resistivity can be accomplished by choosing different materials. This is consistent with real-world practice, e.g. substituting NiCr alloy for copper.

See also item 116.

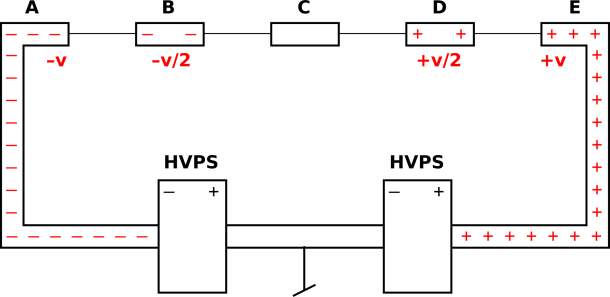

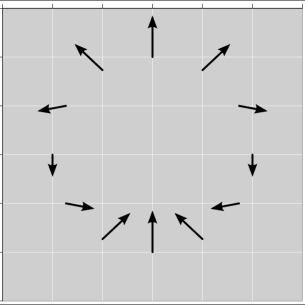

My answer is shown in figure 3. A number of simplifying assumptions have been made, notably the assumption that all the wires are small compared to the distance between wires. To obtain anything resembling a quantitatively-correct distribution would require FEM (finite-element modeling) software.

The ways in which this differs from figure 19.56 include:

- Within each segment of the large wire, let’s assume the capacitance per unit length is a constant. This is a lousy approximation, even in this simple circuit, and it is even worse for other circuits. Subject to this assumption, there is a definite charge per unit length for these segments.

- The charge per unit length on segment B is half the charge per unit length on segment A. Similarly the charge per unit length on segment D is half the charge per unit length of segment E. We know this by applying the voltage-divider formula (which is a corollary of Ohm’s law) to find the voltage, and then applying basic notions of capacitance.

- The charge per unit length on the fine wires is much smaller, because the self-capacitance is much smaller. The charge per unit length is so small it cannot be represented using the same scheme as the other charge densities. Let’s be clear: Even if we pretend the capacitance per unit length is constant for any given diameter of wire, it cannot possibly be independent of diameter.
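The voltage-divider reasoning in the list above can be sketched as follows. This is a hypothetical chain of five thick segments joined by four equal resistances, driven symmetrically about zero potential; it is an assumption chosen for illustration, not a reconstruction of figure 3. It also leans on the same lousy assumption of a constant capacitance per unit length, with an arbitrary value.

```python
# Sketch: charge per unit length from a voltage divider, assuming a
# constant capacitance per unit length on the thick segments.
# The circuit layout and every number here are illustrative assumptions.

def node_potentials(v_plus, v_minus, resistances):
    """Potentials at each node of a series chain (voltage-divider rule)."""
    current = (v_plus - v_minus) / sum(resistances)
    potentials = [v_plus]
    for r in resistances:
        potentials.append(potentials[-1] - current * r)
    return potentials

def charge_per_length(cap_per_length, potential):
    """lambda = c * V, valid only if c really is a constant."""
    return cap_per_length * potential

CAP_PER_LENGTH = 1.0e-11  # farads per metre (assumed, arbitrary)

# Four equal resistances give five nodes: call them A, B, C, D, E.
potentials = node_potentials(+1.0, -1.0, [1.0, 1.0, 1.0, 1.0])
lam = [charge_per_length(CAP_PER_LENGTH, v) for v in potentials]

for name, v, q in zip("ABCDE", potentials, lam):
    print(f"segment {name}: V = {v:+.2f} V, charge/length = {q:+.2e} C/m")
```

In this toy chain, segment B carries half the charge per unit length of A, and D half that of E, purely because the potentials are halved. Changing the capacitance per unit length for the fine wires would change their charge per unit length in proportion, which is why it cannot be treated as independent of diameter.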

As discussed in item 115, part of the problem is that the text speaks of «surface charge density» whereas the diagram depicts charge per unit length. These quantities differ by a factor of r. When we have wires of different r, the analysis given in the book is wrong. Indeed at this point we are two jumps removed from a reasonable analysis, because even if we took r into account, we would still suffer from the unreasonable assumption that capacitance per unit length is independent of the surroundings.

For sufficiently simplified surroundings, you can have a definite capacitance per unit length, but this has negligible practical or pedagogical value. It is misleading, because it is not representative of what happens in ordinary real-world circuits.

Specifically:

- At the start of section 20.1, the first paragraph needs to be removed. Most (perhaps all) of its content could be discarded entirely, since it is duplicative of the later discussion. Anything that needs to be preserved could be worked in later, about three paragraphs down the page. It cannot stay where it is, because it depends on assumptions, limitations, and provisos that have not yet been introduced.

- Then, the section needs to start by saying “Let us consider the scenario shown in figures 20.1 and 20.2” or words to that effect. My point here is that much of what follows is true in that scenario but not reliably true otherwise.

- Even within the scenario, additional specificity is required. It needs to say “We have chosen the type of light bulb, the type of battery, and the type of capacitor so that the light bulb remains lit for several seconds before gradually dimming out.” My point is that this is a choice, not a law of physics.

- In the discussion of time scales, in the paragraph that straddles the bottom of page 793, it speaks of any «circuit containing a capacitor». This needs to be much more restricted. It should be restricted to the circuit in this scenario.

(The contents of the paragraph that was removed can be worked in here, to the extent that they need to be worked in at all.)

- Last but not least, to show the boundaries of the primary scenario, contrasting scenarios should be mentioned: (A) If a smaller capacitor were used, the bulb would stay on for less time, perhaps many orders of magnitude less. (B) For a physically larger circuit, such as a lightning bolt or a nationwide power-distribution grid, it would take more than a few nanoseconds for the charge distribution to settle down, perhaps orders of magnitude more. (C) If we were interested in finer details of what is going on, for instance in the circuits inside a computer, where the switches are opening and closing billions of times per second, it would not be safe to assume that the settling-down timescale is short compared to other timescales of interest.
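To put rough numbers on the contrasting scenarios, here is a back-of-the-envelope sketch. It compares the RC discharge time with the light-travel time across the circuit, which I take as a crude lower bound on the settling time of the charge distribution. All component values and circuit sizes are assumptions picked for illustration.

```python
# Sketch: two timescales for a capacitor-plus-bulb circuit.
# Every resistance, capacitance, and length below is an assumed,
# illustrative value.

C_LIGHT = 299_792_458.0  # speed of light in m/s

def rc_time(resistance, capacitance):
    """Characteristic discharge time of a capacitor through a resistor."""
    return resistance * capacitance

def transit_time(length):
    """Light-travel time across the circuit: a crude lower bound on
    how long the charge distribution needs to settle."""
    return length / C_LIGHT

scenarios = [
    # (label, resistance in ohms, capacitance in farads, size in metres)
    ("tabletop, large capacitor", 10.0, 1.0, 1.0),
    ("tabletop, small capacitor", 10.0, 1.0e-6, 1.0),
    ("continental-scale circuit", 10.0, 1.0, 1.0e6),
]

for label, r, c, size in scenarios:
    print(f"{label:28s} RC = {rc_time(r, c):.3g} s, "
          f"transit = {transit_time(size):.3g} s")
```

With these assumed values the tabletop circuit has a transit time of a few nanoseconds, while the continental-scale one takes milliseconds. The separation of timescales that the book relies on is a property of the chosen scenario, not of every circuit containing a capacitor.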

According to the laws of physics, it is perfectly possible to have the same sign of charge on both plates of a capacitor, for instance if you set the capacitor on top of a Van de Graaff generator. The tradition in introductory engineering courses, as expressed by Kirchhoff’s laws, is to assume this cannot happen. However, the whole theme of chapter 20 and several other chapters is to connect the actual laws of physics to macroscopic circuit behavior. Using the same word, without explanation, for «charging» the capacitor as a whole and «charging» one plate of the capacitor pulls the rug out from under this effort.

In the real world, it is quite common to observe violations of Kirchhoff’s laws.

One cannot expect students to figure this out on their own.

The absolute value bars are not even used consistently. One example where they are not used is in the sidebar on page 803, where it claims «we always write ΔV = I R». On page 821, equation 821.60(R) omits the absolute value bars, while on the very next line, equation 821.63(R) has them. There are many other examples of this inconsistency.

Also, writing it in terms of finite differences is silly; see section 2.4.

Of course, in accordance with the “pseudowork / kinetic energy theorem” the cyclotron must have done work on the particle, but this is just another illustration of the fact that work is not a function of state. Work is not a potential.

I know that folks in the field measure every energy in electron·volts, but that’s just dimensional analysis; it does not mean there is a voltage anywhere equal to the total energy divided by e. Also the book treats voltage as synonymous with potential difference, but that is a profound misconception; in fact, not every voltage is a potential. So we are at least two jumps removed from equating the energy to a potential difference.

Simple suggestion: Just say “imparts energy” rather than “creates a potential”.

- Perhaps most importantly: There is absolutely no way a static magnetic field can do work on a point charge. This is a famous theorem. It is easy to understand and easy to prove. Hint: The force is perpendicular to the velocity.

- Let’s look at equation 851.80. I assume all the quantities in this equation are scalars, in accordance with the notation used throughout the book. Now, suppose you write out the corresponding vector equation, involving things like v→×B→. Then we can easily see that equation 851.80 is nonsense. The v in this equation is the speed of motion of the bar, as it moves in the x direction ... whereas the physically-correct equation would use the speed of the electron. Note that if it is to have any chance of moving from one end of the bar to the other, the electron must have some velocity component in the y direction. This y-velocity turns out to be a crucial part of the right answer.

- When we consider conservation of energy, we find yet another difficulty. Energy must be supplied to the system from some external source, by something pushing on the bar. This is a force in the x direction ... even though the force implied by equation 851.80 is in the y direction.

- Equation 851.80 can be thought of as the right answer to a different question. There is in fact a voltage v B L between the two rails. It becomes an interesting physics question why an utterly wrong derivation produces a correct formula for the voltage. It’s probably just dimensional analysis in disguise; you can mention wrong physics or no physics at all, and still obtain equation 851.80 (or something similar) by dimensional analysis.
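The hint above (the force is perpendicular to the velocity) is easy to check numerically. The sketch below uses an assumed geometry: a field along z, and an electron velocity with both an x component (carried along with the bar) and a y component (drifting along the bar). The numerical values are arbitrary.

```python
# Sketch: the magnetic force q v x B does no work, because it is
# always perpendicular to v. Geometry and numbers are assumed.

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))

def magnetic_force(charge, velocity, field):
    """Magnetic part of the Lorentz force, F = q v x B."""
    return tuple(charge * c for c in cross(velocity, field))

Q = -1.0                 # charge in arbitrary units (electron-like sign)
B = (0.0, 0.0, 2.0)      # uniform field along z (assumed)
v = (3.0, 0.5, 0.0)      # x: motion with the bar, y: drift along the bar

F = magnetic_force(Q, v, B)
print("force components:", F)
print("power F.v:", dot(F, v))
```

Two things are worth noticing in the components. The y component of the force depends on the x velocity, and the x component of the force depends on the y velocity. The two contributions to the power cancel, so the net work done by the magnetic field is zero, and the energy has to come from whatever is pushing the bar in the x direction.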

We have several non-wrong ways of knowing the voltage:

- Dimensional analysis tells us that v B L must be at least roughly right.

- The Faraday-Maxwell law of induction says V = dφ/dt. The voltage is the time derivative of the flux.

- If you insist on doing the calculation in the lab frame, the true physics involves at least two additional steps that page 851 does not even hint at. (On the other hand, the exceptionally astute student might realize that the argument on page 848 could be applied – in reverse – to shed some light on this problem.)

- The elegant way to proceed is to transform into a frame comoving